Tech leaders ranging from Sam Altman to Elon Musk have warned of the risks emanating from AI. While it might seem like genuine concern for humanity, there could also be a not-so-altruistic reason why AI executives frequently warn of AI taking over the world.

According to a Bloomberg report, AI was the “central theme” of last week’s Bloomberg Technology Summit.

That might not come as a surprise, as AI has emerged as the hottest trend in the tech industry.

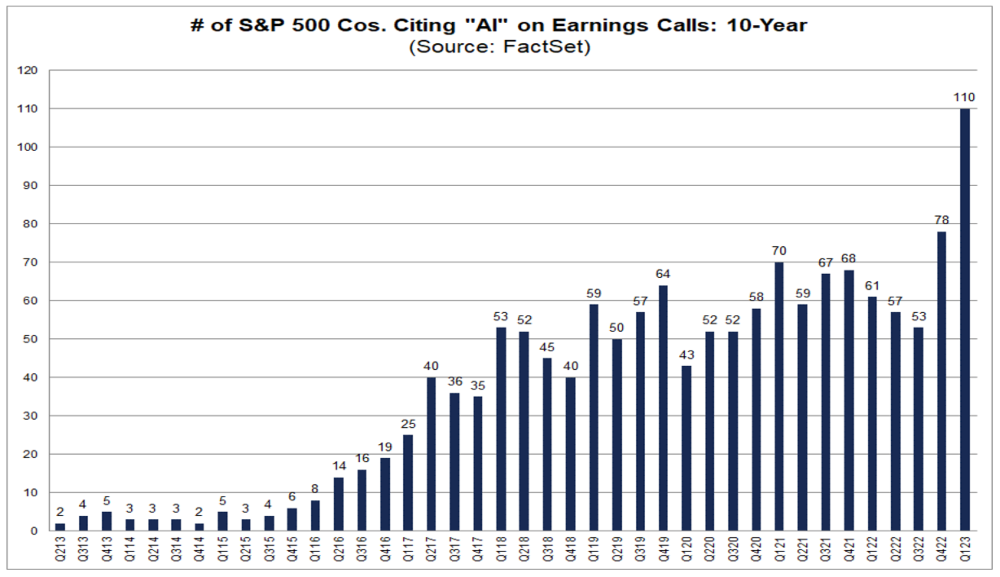

According to FactSet, 110 S&P 500 companies used the word “AI” in their Q1 2023 earnings call – which is over thrice the ten-year average and the highest level since 2010.

US companies’ obsession with AI is not hard to comprehend. Investors have poured money into listed AI stocks this year. C3.ai, one of the rare listed pure-play AI companies, has tripled this year, and so has Nvidia, whose chips are crucial for building AI models.

The optimism towards AI has led to a broad-based rally in US tech stocks this year, with the Nasdaq 100 adding $4 trillion to its market cap.

While tech leaders see AI as a massive opportunity, many have also been warning of its risks, including describing it as an “existential risk” for humanity.

Tech Leaders Warn AI is Taking Over the World

Musk is among the tech leaders who have been constantly warning about AI risks. Speaking at the Wall Street Journal CEO Summit last month, the Tesla CEO said, “It’s a small likelihood of annihilating humanity, but it’s not zero,” while adding there is a “non-zero chance of [AI] going full ‘Terminator’.”

The billionaire added, “I don’t think AI will try to destroy all of humanity, but it might put us under strict control.”

To be sure, Musk has warned of AI risks before and has termed the technology “dangerous.” He has been lobbying aggressively for AI regulations and in April met Senate Majority Leader Chuck Schumer and other US lawmakers to discuss them.

After his meeting, the White House invited the CEOs of OpenAI, Google, Microsoft, and Anthropic to discuss AI with Vice President Kamala Harris.

That meeting was followed by Altman’s testimony before the Senate, where he admitted that AI can “go quite wrong” – something the OpenAI CEO reiterated at last week’s Bloomberg Tech Summit.

Incidentally, Musk was among the tech leaders who signed an open letter calling for a halt on AI models that are more powerful than GPT-4.

Calls to Regulate AI Are Not All About Altruism

Meanwhile, Palantir CEO Alex Karp lashed out at those calling for a halt on AI models, saying they are doing so because they are lagging behind and don’t have a product ready.

$PLTR Alex Karp has a different view from @elonmusk on pausing AI.

From today’s letter:

“Some have called for a pause on efforts to develop even more powerful versions of these emerging technologies, hoping perhaps that our adversaries will join us in considered reflection on…”

Chad (@PalantirChad), April 7, 2023

According to Karp, these people want a pause so that they can study AI in that timeframe and develop their own products. Musk, for instance, is working on his startup TruthGPT and has said that the industry needs a “third horse in the race” to take on ChatGPT and Google Bard.

The Bloomberg report offers another reason why AI leaders across the board have been warning of the technology going rogue: “if they make a compelling case that so much depends on handling AI correctly, other aspects of running the business, such as raising money, attracting customers and recruiting the most in-demand engineers, become easier.”

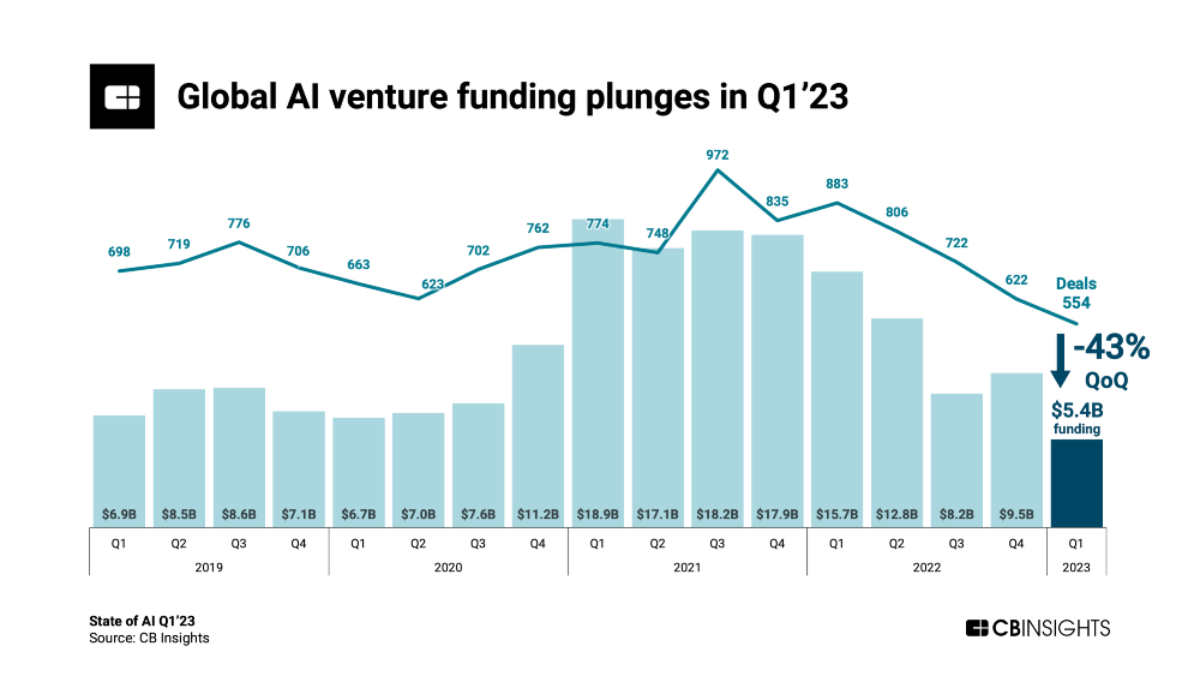

Notably, global AI venture funding plunged in Q1 2023 in line with the broader slump in venture capital (VC) funding.

According to Crunchbase, global VC funding into AI startups fell 43% YoY to $5.4 billion in the first quarter of the year.

Crunchbase noted that eight of the 38 unicorns created in the first five months of this year are AI companies. Builder.ai, CoreWeave, and Anthropic are among the AI companies that raised funds in May.

Countries Are Looking to Regulate Artificial Intelligence

All said, the concerns over AI risks are not unfounded, as some recent incidents show. In one, an AI-generated fake image showed a blast near the Pentagon, leading to a momentary crash in US stocks.

Regulators in most developed countries are contemplating AI regulations, and the European Union is expected to soon come up with rules governing the technology.

The UK’s Competition and Markets Authority is also investigating AI, while the country’s Prime Minister Rishi Sunak has said that the UK will hold a global summit on AI regulations in the fall of this year.

In his keynote address at London Tech Week earlier this month, Sunak said the country would work for global cooperation on AI regulations and stressed: “AI doesn’t respect traditional national borders.”
