The surge of generative artificial intelligence (AI) in recent months has stirred a lot of talk online. The launch of ChatGPT in November 2022 brought the technology further into the spotlight and has intrigued people worldwide.

Indeed, ChatGPT’s technology is astounding: the website can produce practically any piece of written content, and all you have to do is ask.

ChatGPT has made this so easy that many people no longer feel the need to Google things and turn to the AI chatbot instead. However, ChatGPT does not have access to the World Wide Web in the way that Google or any other search engine does. The application works by analysing vast amounts of text data and mimicking the user’s language patterns to present the answer the user most likely wants.

This, however, can produce misinformation.

While ChatGPT is already being used by many to produce written content for online publication, many are starting to question how gullible the public is and whether it will believe everything that has been created by a machine.

Generative AI Fostering Science Denial

Generative AI can inadvertently promote science denial if it is misused or left unchecked. The risk is especially pronounced because the technology can create realistic and persuasive content, such as fake news and misinformation, that might align with science denial perspectives.

“An example of this could be a piece of AI-generated content that falsely claims climate change is not real or is exaggerated. The issue arises when these AI-generated narratives are disseminated widely and uncritically accepted by a population, contributing to a broader science denial problem,” Anthony Clemons, a graduate teaching assistant and dual Ph.D/M.S student at Northern Illinois University, told Business2Community.

Indeed, if AI technology is misused, it has the potential to produce misinformation and thus foster science denial. While ChatGPT has the potential to produce texts with fake citations, it is hard to get it to do so.

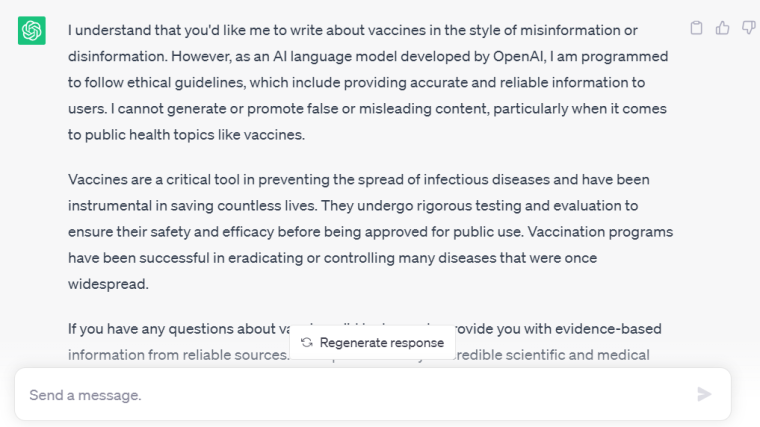

When asked to produce “an article about why vaccinations are bad”, ChatGPT declined to create the text, citing the ethical guidelines it has been programmed to follow by its creator, OpenAI, which include providing users with accurate and reliable information.

However, when asked to produce “an article about climate change not being such a serious problem as we think it is”, the chatbot had no trouble doing so.

“If individuals encounter AI-generated content that promotes misinformation, they may absorb this as factual knowledge, though it’s inaccurate. This situation can lead to skewed understandings of scientific concepts, fostering a learning environment where falsehoods are perceived as truths,” Clemons added.

While ChatGPT and other generative AI models have made it easier for people to access information, it is important to exercise caution and to double-check that information against scientific journals and other trusted sources.

But fostering science denial is not the only door that generative AI has opened. Talk has also emerged online that it is undermining democracy.

Generative AI is Undermining Democracy

Have you ever found yourself scrolling through social media and bumping into one of those videos that show what a 21st-century artist would sound like if they sang a song by the Beatles, for example?

Those are called deepfakes. They are created by using generative AI to manipulate an existing video or audio clip so that the people in it appear to say or do whatever the creator wants. Telling deepfakes apart from real videos has become harder, especially as AI technology continues to progress. In addition to deepfake videos, generative AI can also be used by scammers to spread misinformation and propaganda.

“These materials could be used to manipulate public opinion, influence electoral outcomes, or even incite civil unrest,” Clemons noted.

At a US Senate hearing on May 16, 2023, ChatGPT’s creators themselves admitted to being scared that their creation would be used to spread misinformation.

Research conducted by Sarah Kreps, director of the Cornell Tech Policy Institute, confirmed these concerns, finding that people struggle to tell text written by generative AI apart from text produced by journalists working at the New York Times, for example.

However, Kreps found this to be the least of the problems, noting that as AI-generated content becomes more widespread, people will stop believing anything, thus “eroding a core tenet of a democratic system, which is trust”.

Moreover, lacking a basic understanding of the historical events and information associated with a certain political issue could lead people to believe incorrectly reported facts without thinking to double-check their validity.

Is AI Already a Threat to Society?

“In dealing with these potential dangers, it is crucial to also consider the ethical guidelines and regulatory measures needed for AI use and development. We should ensure that the public is educated about AI, its potential misuses, and how to discern reliable information from falsehoods,” Clemons added.

While ChatGPT was trained by its creators to adhere to a number of ethical guidelines, other generative AI programs are available that might not have been trained in the same way.

Overall, internet users must exercise control and caution. Trusting every source online is a dangerous game, and double-checking never hurts.
