Corporate AI leaders have met with President Joe Biden to discuss AI safety as part of a voluntary agreement.

In a Friday meeting with President Biden, the leaders of America's biggest AI-focused companies, including Meta, Amazon, Google, Microsoft, Anthropic, Inflection AI, and OpenAI, pledged to continue their efforts to ensure the safety of AI.

However, there are concerns that the voluntary agreement between the Biden administration and leading AI companies may be more of a symbolic gesture than aggressive regulation.

Today’s announcement by President Biden of an agreement to establish industry standards in AI development is a helpful step forward on AI in the short term.

In the long term, history has shown we will need more than voluntary commitments from industry leaders. 1/ https://t.co/pKzLEfUZ50

— Rep. Don Beyer (@RepDonBeyer) July 21, 2023

Many tech billionaires have become preoccupied with hypothetical doomsday scenarios in which an AI becomes fixated on a specific goal and triggers human extinction in pursuit of it, Forbes's Johanna Costigan wrote.

She argued that their fascination with these stories is tied to their belief in longtermism, a philosophy that calls for making short-term trade-offs for the sake of humanity's long-term well-being, adding:

“Conveniently, this concept allows them to continue developing profitable technological applications while claiming paternalistic, karmic excellence: they are not only bestowing life-optimizing tech to the masses, they are also single handedly ensuring the tech they make doesn’t go rogue and kill us all.”

President Biden's acknowledgment that these executives are critical to making sure AI develops "with responsibility and safety by design" also suggests that the White House has adopted this narrative.

Costigan argued that because policy adheres to the ideals of "AI safety" as defined by tech companies, issues such as copyright infringement and sexist and racist algorithms are being ignored.

These immediate challenges posed by AI are dismissed as mere risks, overshadowed by grandiose hypothetical threats, she wrote.

The term “AI safety” is deliberately vague, allowing different stakeholders to interpret it in their own ways.

It can refer to job security, trust in algorithms, or the confidence that facial recognition won’t be abused.

However, the focus on creating “safety” in AI neglects the fact that the data on which AI relies remains entirely unregulated in the United States. This lack of regulation has given AI companies the freedom to design safety standards that align with their own moral and financial interests.

Competition From China Prompts US Government to Avoid Stiff Regulation

China’s domestic development of AI has further intensified the US government’s inclination to step aside in order to allow perceived innovation to thrive, Costigan said.

She noted that while China may regulate technology more strictly, the average US citizen is more likely to encounter unmarked deepfakes.

In democratic countries, where information shapes public opinion and dictates election outcomes, synthetic images and text generated by AI pose a significant threat.

The recent meeting between the White House and tech CEOs shows a shared commitment to secure AI development, but without data privacy regulations, attempts to make AI safe are akin to trying to "regulate the drinking of wine in restaurants—but not the commercial process of turning grapes into wine."

Voluntary measures and general commitments are a step in the right direction, but more concrete guidelines and specific plans need to be implemented.

Companies such as Anthropic have announced their intention to share more specific cybersecurity measures and responsible scaling plans.

All in all, while the efforts of AI industry leaders to address safety concerns are commendable, there is a need for greater scrutiny and accountability.

The dominance of these companies in shaping the narrative around AI risks and safety has allowed them to evade immediate challenges such as data privacy and algorithmic biases.

AI Regulation Finds Momentum Amid Growing Adoption

Last month, a bipartisan group of lawmakers in the US introduced the National AI Commission Act, which aims to create a blue-ribbon commission to explore the regulation of AI.

The proposal would establish a 20-member commission to study AI regulation, including how regulatory responsibility is divided among agencies, whether those agencies have the capacity to regulate, and how to ensure that enforcement actions are aligned.

Members of the commission would come from civil society, government, industry, and labor, and no single sector would dominate it.

The move came as the skyrocketing popularity of AI tools has fueled growing calls for regulation.

As reported, the European Union and the US announced that they are collaborating on a voluntary code of conduct for AI. Officials will seek feedback from industry players and invite parties to sign up, with the aim of drafting a proposal that the industry can commit to voluntarily.

Meanwhile, the EU has spent around two years working on its Artificial Intelligence Act, a sprawling piece of legislation that aims to classify and regulate AI applications based on their risk.

These attempts to regulate AI come as the technology is well on the path to finding mainstream adoption.

According to a recent survey from the Pew Research Center, 27% of Americans say they interact with AI almost constantly or several times a day.

The survey found that another 28% of individuals encounter AI about once a day or several times a week.

Furthermore, almost all businesses (97%) expect to use AI to improve their efficiencies and cut costs.

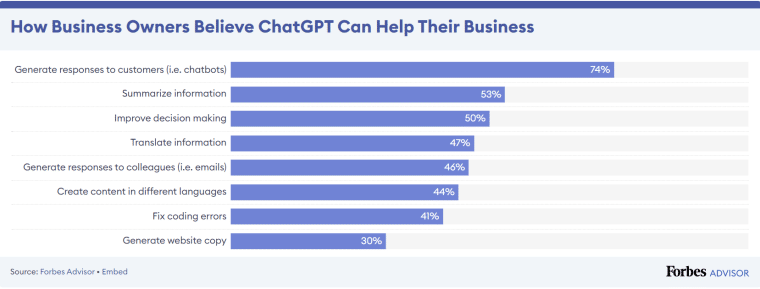

Many businesses see promise in AI for tasks such as streamlining email communications with colleagues (46%), generating website copy (30%), fixing coding errors (41%), translating information (47%), and summarizing information (53%).

