The democratic world has already gotten its first taste of AI-generated election deepfakes, and it’s only the beginning. Earlier in 2023, a video of Chicago mayoral candidate Paul Vallas circulated on the internet.

On the surface, the video appeared to show Vallas complaining about rampant crime and lawlessness in Chicago, even saying at one point that “no one would bat an eye” if police killed 17 or 18 people and suggesting that we should start “refunding the police.”

First time I’ve seen this – Twitter account pops up, tweets a video of a political candidate (Paul Vallas) saying something with what seems like a fake, AI generated voice

The fake was sniffed out quickly in the replies, and then the account + tweet were promptly deleted pic.twitter.com/mRjjuVvQGo

— Jake Lewis (@jake____lewis) February 27, 2023

To those who have heard AI-generated voices before, this one was rather easy to identify as a fake. The tone of the voice doesn’t match what it is saying.

It falls into the uncanny valley, the term for the unsettling feeling you get when something is almost right but slightly off in an unusual way.

Luckily, this video was called out for what it was in the comments and was quickly reported and removed from the platform. However, it was still widely circulated before it was taken down, and it’s possible that it changed the minds and votes of some Chicago voters.

What’s Next For Election AI Deepfakes?

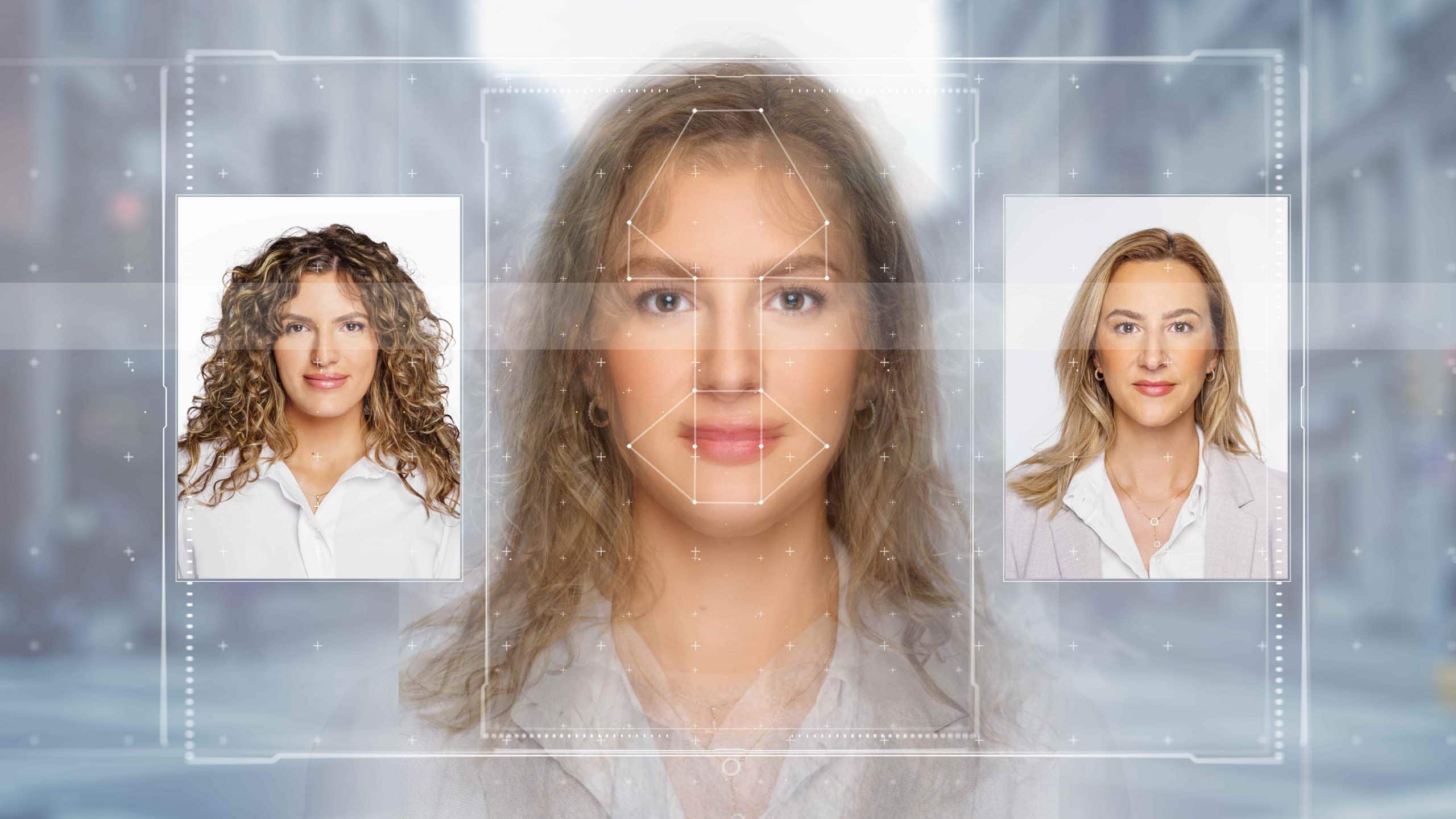

The issue of deepfake AI videos and audio clips being used to influence elections or harm campaigns is still in its early days. As AI continues to advance, these tools will become increasingly effective, eventually moving beyond the uncanny valley.

The best of these fakes will probably start to look completely real, making it very hard to tell whether a video or audio clip is genuine. It’s also worth mentioning that the Vallas video isn’t the best example of what AI voice generators can do right now.

The best tools, given large samples of a person's voice, can be extremely convincing. A YouTuber has already created an entire AI-generated podcast replicating top podcaster Joe Rogan, and the voices are impressive. They still aren’t perfect, and some bits of audio are clearly AI-generated, but parts of the podcast are quite convincing.

The problem is magnified because you don’t need a very long clip to change the course of an election. A shocking soundbite of about 10 seconds from a candidate could completely change an election, and it likely wouldn’t be too hard to generate and perfect over time with the right audio samples.

What Can Be Done to Stop AI Deepfakes From Ruining Elections?

The potential for AI deepfakes to alter elections may seem like it’s still a ways off from being a major problem, but it may come sooner than you think, and there are few if any protections in place to fight it.

There are no laws regulating the use of AI in political campaigns, though a fake video would likely at least be classified as libel. The problem may have to be dealt with by social media companies first, as their platforms are the most likely vectors for deepfake videos.

Most of the largest social media platforms have strict rules about disinformation and impersonation but some don’t have any on the books for AI-generated content.

Facebook and Instagram have designated teams of fact-checkers that debunk “faked, manipulated or transformed audio, video, or photos” and Reddit and YouTube have strict policies against similar content manipulation.

Twitter’s policy is much less clear, though it says Tweets with misleading information may be labeled as such and that those harmful to individuals or communities will be deleted. It also adds that content created “through use of artificial intelligence algorithms” will be scrutinized more closely.

TikTok has the most hardline policy, requiring users themselves to label media generated or modified with AI, under threat of the content being removed. This may not be perfect, however, as the label can go in multiple places on the post, including the caption and hashtags, where viewers may easily miss it.

All of these social media platforms have had issues dealing with misinformation and disinformation in the past, so they may not be all that effective at taking down false AI-generated content either. They may also eventually have no way of telling what is AI-generated and what isn’t, making the question of moderation moot.
