New research claims AI detectors are inaccurate, discriminate against non-native speakers, and fail to identify AI-generated content.

Janelle Shane, an AI researcher and blogger, has revealed the inaccuracies and discriminatory nature of AI detection tools in a recent blog post.

In her post, she highlighted a Stanford University study showing that AI detection tools often misidentify AI-generated content as written by a human. The tools also exhibit a strong bias against non-native English-speaking writers.

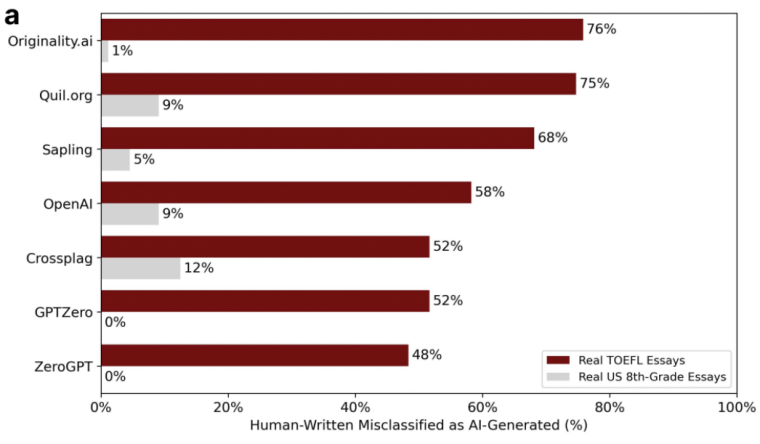

The study found that AI detection products, such as Originality.ai, Quill.org, and Sapling GPT, misclassified writings by non-native English speakers as AI-generated between 48% and 76% of the time.

In contrast, the misclassification rate for native English speakers ranged from 0% to 12%.

She argued that AI detection tools treat less proficient writing as AI-generated while labeling AI-generated content as written by a human.

“What does this mean?” Shane wrote. “Assuming they know of the existence of GPT detectors, a student who uses AI to write or reword their essay is LESS likely to be flagged as a cheater than a student who never used AI at all.”

Shane’s Experiment Further Demonstrates Flawed Nature of AI Detection Tools

Shane conducted her own experiment to put the AI detection tools to the test.

She entered a portion of her book into two detectors. One detector rated her writing as “very likely to be AI-written,” while the other, which was used in the Stanford study, rated it as “moderately likely” to be AI-written.

Moreover, when Shane prompted ChatGPT to elevate her writing using literary language, the AI detector classified it as “likely written entirely by human.”

Interestingly, when she asked ChatGPT to rewrite the text as a Dr. Seuss poem or in Old English, the detectors rated these passages as “more likely human-written” than the untouched text from her published book.

“Don’t use AI detectors for anything important,” Shane concluded in her blog post.

The new study comes as schools and universities are increasingly using these tools to identify whether students are using AI to write essays.

Back in May, a professor at Texas A&M University-Commerce used ChatGPT to check whether his students had plagiarized an essay he had assigned or had handed in their own original work.

The AI chatbot incorrectly identified the essays submitted by his students as being AI-generated, which led to the professor failing his entire class.

After some investigation, it was revealed that the essays were indeed written by the students themselves and that ChatGPT’s assessment was false.

Recognizing his mistake, the professor had to apologize to his students and agreed to offer them a second opportunity to take the exam.

Students Use AI to Write Essays

The surge in popularity of AI detection tools comes as students from all parts of the world have been using AI to write essays and generate content.

According to a recent report by the BBC, a college student from Wales, United Kingdom, who maintained an average GPA, used ChatGPT to write an essay and received the highest grade he ever earned at the school.

The student, studying at Cardiff University in Wales, conducted an experiment by submitting two essays of 2,500 words in January.

One essay was solely the student’s own work and received a grade of 2.1, which matched his usual performance. The second essay, written with ChatGPT’s assistance, earned him the highest grade he had ever received at the university.

“I didn’t copy everything word for word, but I would prompt it with questions that gave me access to information much quicker than usual,” the student told the media.

He also admitted that he would most likely continue to use ChatGPT for the planning and framing of his essays.

Kids are using AI to write essays and get straight As pic.twitter.com/i0yyXiVEtU

— Peter Yang (@petergyang) September 24, 2022

Likewise, a Vice report has revealed that Reddit user innovate_rye, a first-year biochemistry major and an “A” student, has been using a powerful AI language model to finish most homework assignments.

“I would send a prompt to the AI like, ‘what are five good and bad things about biotech?’ and it would generate an answer that would get me an A,” the student said in an interview.

Universities Ban AI Chatbots Amid Fears of Plagiarism

Many institutions have already taken action against AI chatbots, citing plagiarism, concerns about equality, and the risk that the tools allow some students to sidestep coursework.

Back in April, Tokyo-based Sophia University banned students from using ChatGPT and other AI chatbots to write assignments such as essays, reports, and theses.

Prior to that, the New York City Department of Education blocked the chatbot from school networks and devices across the district, citing concerns over plagiarism, as well as the bot’s accuracy.

Seattle Public Schools and Los Angeles Unified School District put similar limits on the use of the bot in December. A number of other major international universities have also imposed a ban on AI tools.