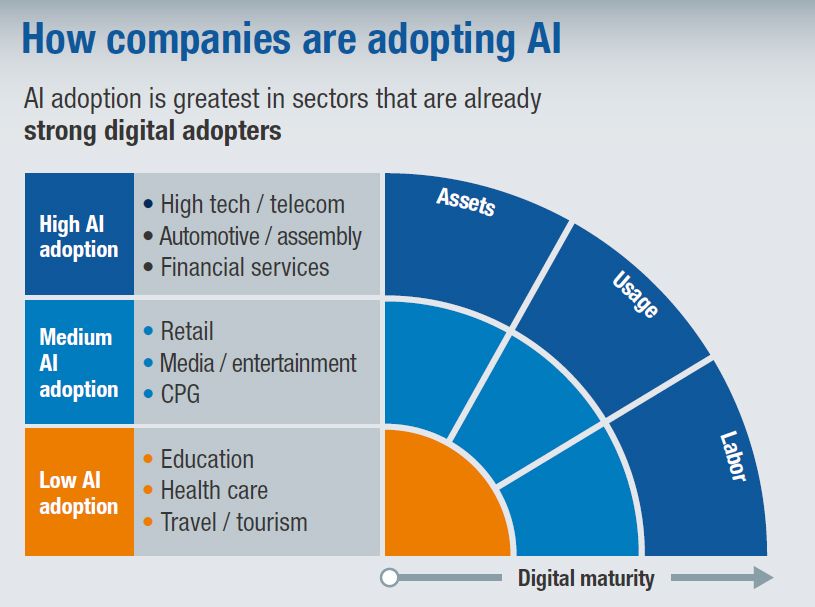

Like many other technologies, Artificial Intelligence (AI) has been widely adopted in a variety of businesses and in everyday life. As a result, it has the potential to solve many business challenges and to give consumers a new perspective on the digital world.

(Image Source: The AI Journal)

However, as welcome as its changes are, there is a flipside to AI and its advances. As with most technologies, there are privacy concerns around the protection of customer and vendor data. In addition, AI is fuelled by algorithms that can create new sensitive information affecting consumers and employees.

This means AI may create personal data, as it can gather and analyse data while combining it in large quantities from a range of different sources. This practice alone increases the information-gathering capabilities available to other technologies, which has direct consequences for mobile privacy.

Machine learning, meanwhile, is a subset of AI that describes how computer algorithms become proficient at solving problems by using large data sets to train themselves. In addition, machine learning refines and improves as it goes along by taking in new data, learning, adapting and making deductions. This helps software operate better over time.

How AI and machine learning compromise mobile privacy

AI is an attractive technology for information gathering thanks to its ability to process information at scale and speed through automation. AI can compute far faster than humans can analyse. It is also inherently suited to analysing large data sets, making it one of the few practical ways to process big data. It can also perform designated tasks without supervision, which makes it highly efficient. These same qualities are what allow it to affect privacy.

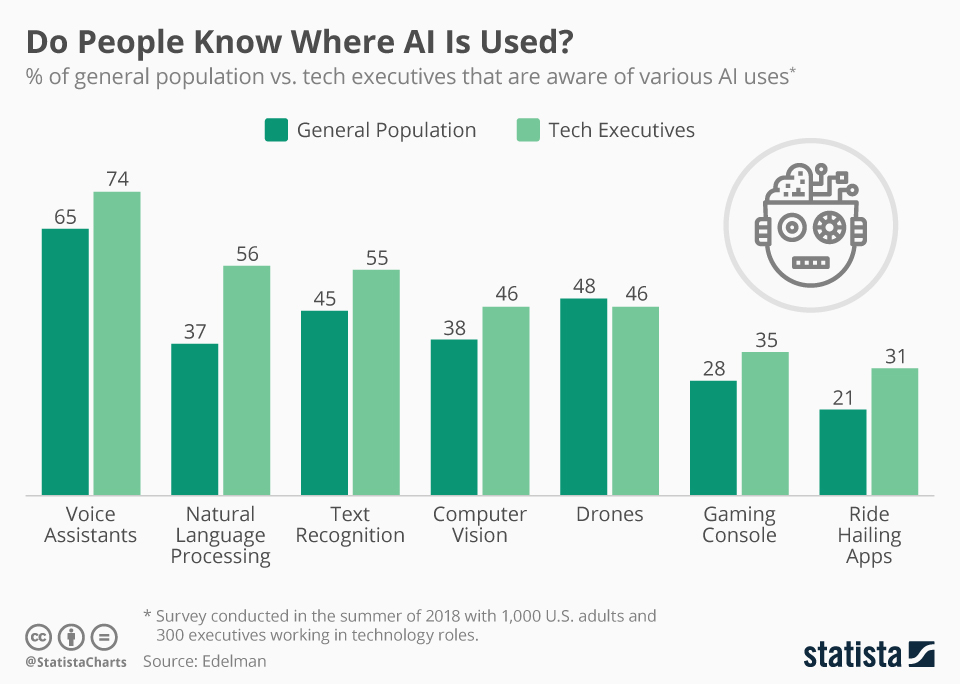

Many people are unaware that consumer products have features that make them vulnerable to data exploitation by AI, as they do not realise how much data their devices and software generate, process and share. The exploitation of data from smart devices will only continue as AI becomes increasingly integrated into our everyday lives.

(Image Source: Statista)

AI can also be used for identity tracking, monitoring individuals across devices. This means that even if personal data is anonymised, once it is combined with a more extensive data set, the AI can de-anonymise it based on inferences from other devices.
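To make the de-anonymisation risk concrete, here is a minimal sketch of a linkage approach. It is not how any particular product works. The function name `link_records`, the field names and all of the records are hypothetical, chosen purely for illustration. The idea is that an "anonymised" record can be re-identified when its remaining attributes match exactly one person in a second, named data set.

```python
def link_records(anonymised, auxiliary, keys):
    """Match anonymised rows to named rows that share the same attributes.

    Hypothetical helper: `keys` lists the fields present in both data sets.
    """
    # Index the named (auxiliary) data set by the shared attributes.
    index = {}
    for row in auxiliary:
        index.setdefault(tuple(row[k] for k in keys), []).append(row["name"])

    matches = {}
    for row in anonymised:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match re-identifies the record
            matches[row["record_id"]] = candidates[0]
    return matches


# Made-up "anonymised" health records: names removed, other fields kept.
anonymised = [
    {"record_id": "r1", "postcode": "AB1", "birth_year": 1985, "diagnosis": "asthma"},
    {"record_id": "r2", "postcode": "CD2", "birth_year": 1990, "diagnosis": "diabetes"},
]

# Made-up named data, e.g. collected from another app or device.
auxiliary = [
    {"name": "Alice", "postcode": "AB1", "birth_year": 1985},
    {"name": "Bob", "postcode": "CD2", "birth_year": 1990},
    {"name": "Carol", "postcode": "CD2", "birth_year": 1990},
]

reidentified = link_records(anonymised, auxiliary, ("postcode", "birth_year"))
```

In this toy data, record `r1` maps back to a single name, while `r2` stays ambiguous because two people share the same postcode and birth year. The more data sources are combined, the fewer such ambiguities tend to remain.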

AI has become increasingly adept at recognising faces and voices through facial and vocal recognition. Unfortunately, these two capabilities can severely compromise anonymity in public spaces. AI can also use sophisticated machine learning algorithms to infer or even predict sensitive information from non-sensitive data sets. However, there is a place for AI and machine learning models that can collect data without compromising privacy, especially in the healthcare industry.

Machines to the rescue

With the advancement of technology, from secure VPNs to improved machine learning models, an approach called ‘Federated Learning’ is set to allow organisations, particularly in the medical field, to develop a new way of collecting anonymous data from mobile devices without compromising privacy.

Federated learning is a machine learning method in which algorithms are trained across multiple decentralised devices and/or servers that each hold their own data samples. The raw data is never exchanged and stays on the device that holds it, so there is no central dataset or server storing the sensitive information.

(Image Source: Varonis)

The model aims to train algorithms such as deep neural networks on a range of local datasets held in local nodes, without exchanging the data itself. Only the model parameters are exchanged between nodes. The advantage of this model is its ability to reduce privacy and security risks, because it limits the attack surface to the device alone, whereas traditional methods expose both the device and its cloud connections.
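The parameter exchange described above can be sketched with a simple weighted-averaging scheme, often called federated averaging. This is a minimal illustration under stated assumptions, not a production implementation: the model is a one-variable linear model, the three "devices" and their data are simulated, and every function name here is hypothetical.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Train a copy of the global model on one device's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return (w, b)  # only parameters leave the device, never the data


def federated_average(updates, sizes):
    """Combine device parameters, weighted by each device's sample count."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def make_device_data(rng, n):
    """Simulated private data following y = 2x + 1 plus small noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


def run_federated_training(rounds=200, seed=0):
    rng = random.Random(seed)
    devices = [make_device_data(rng, n) for n in (20, 35, 50)]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each device trains locally, starting from the current global model.
        updates = [local_update(global_weights, d) for d in devices]
        # The coordinator sees only the returned parameters.
        global_weights = federated_average(updates, [len(d) for d in devices])
    return global_weights


trained_w, trained_b = run_federated_training()
```

In this sketch the coordinating step never touches the `(x, y)` pairs, only the `(w, b)` values each device returns. Real deployments typically add further protections on top of this, since model parameters can themselves leak information.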

This type of model has enormous potential in healthcare settings and hospitals, especially as large volumes of sensitive data need to be shared to help treat conditions and diseases.

Although new technologies can solve some of the issues brought on by AI and machine learning, in truth they are still in their infancy, which means they have their own shortcomings. For example, although federated learning does train algorithms across decentralised edge devices without exchanging their data samples, it is still challenging to inspect its workings to ensure there is no privacy leak.

However, the fact that big tech and big data are recognising the privacy issue means that, although we are at the start of our journey, there is still potential for both AI and machine learning to be adapted to work in a way that is safe to use long into the future.