Image source: Facebook

Who gets taken in by fake friend requests and spam links on social media? Apparently, enough people to keep bad actors generating social spam, and enough to give the social networks themselves cause to devote resources to reducing phishing links, fake reviews, and spam comments. Facebook researchers are conducting a study of social spam to identify patterns in clicking behavior, and in its recent first-quarter earnings report, Twitter said it is successfully tamping down on bots that produce spam.

Nevertheless, social spam persists. It’s been around since the earliest days of social networks: we’ve all experienced our share of unsolicited comments, friend requests from strangers, phishing links, fake reviews, and clickbait. And as online services introduce new features that make connecting and sharing content easier than ever, social spam takes on new shapes and forms, including spam account profiles.

Unlike traditional email spam, social spam can reach a large audience by the very nature of the platform, and it can appear “trustworthy” because it comes from people in your social network. This kind of spam also has a long lifespan, since social media content stays online 24/7 and is rarely, if ever, removed.

More than a mere annoyance, such attacks severely degrade brand reputation and platform integrity, hindering user growth and even driving away existing users. The result is a stagnant user base and lost ad revenue, which is what ultimately hits home for social media companies grappling with spam account profiles.

Spam coming to a profile “near” you

We recently observed malicious activity on a well-known social network that illustrates the ever-changing landscape of spam. Today, spam is commonly detected by content-based solutions that analyze messages, for example by looking at word frequencies, checking whether the message format is valid, or searching for known malicious text.

To evade these detection methods, the attackers chose not to post or send messages through traditional communication channels, so these security solutions had no “content” to analyze. Instead, the spammy text was placed in the profile descriptions of fake accounts. Hijacking an app feature that was never meant for messaging effectively let the attackers sidestep the vantage points that existing security solutions monitor.

An example of profile spam (Image source: Hyphenet blog)
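To make the content-based approach concrete, here is a minimal Python sketch of a message scorer that combines the three signals mentioned above: known malicious text, word frequency, and message format. The blocklist, the spam-word set, the thresholds, and the function name `content_spam_score` are all hypothetical illustrations, not any platform’s actual filter. Note what happens when no message is sent at all, as in the profile-spam attack: the scorer has nothing to analyze and returns 0.

```python
import re
from collections import Counter

# Hypothetical blocklist of known malicious text (illustrative only).
KNOWN_MALICIOUS = ("free followers", "click here to claim", "spam.example")

# Hypothetical vocabulary of words that are common in spam (illustrative only).
SPAM_WORDS = {"free", "win", "prize", "click", "claim", "offer"}

URL_RE = re.compile(r"https?://\S+")
WORD_RE = re.compile(r"[a-z']+")


def content_spam_score(message: str) -> float:
    """Return a heuristic spam score in [0, 1] for one message (a sketch)."""
    text = message.lower()
    if not text.strip():
        # No content to analyze: a content-based filter cannot flag anything.
        return 0.0

    # Signal 1: presence of known malicious text is treated as decisive.
    if any(phrase in text for phrase in KNOWN_MALICIOUS):
        return 1.0

    # Signal 2: word frequency, i.e. the share of tokens that are spam words.
    words = WORD_RE.findall(text)
    counts = Counter(words)
    spam_share = sum(counts[w] for w in SPAM_WORDS) / max(len(words), 1)
    score = min(1.0, 2 * spam_share)

    # Signal 3: crude format checks with assumed thresholds:
    # many links, or a single word repeated over and over.
    if len(URL_RE.findall(message)) > 2:
        score += 0.25
    if counts and counts.most_common(1)[0][1] >= 4:
        score += 0.25

    return min(score, 1.0)


print(content_spam_score("WIN a FREE prize! Click to claim your offer"))  # 1.0
print(content_spam_score("See you at lunch tomorrow"))  # 0.0
print(content_spam_score(""))  # 0.0: nothing was sent, so nothing to score
```

Whatever the scoring method, a filter like this only inspects the channels it is wired to, which is exactly the blind spot the profile-description trick exploits.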

More ingenious is how the attackers exploited the location-proximity feature available on mobile apps to distribute spam. While such features let users find, view, and interact with others who are nearby, they also exposed ordinary users who were “close” to the fake accounts to the spam in their profile text. This is exactly what the attackers wanted. Using GPS faker tools, they set the fake accounts’ profile locations to span dozens of major cities, reaching a large population of users.
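The sketch below illustrates why faked GPS coordinates work so well against a location-proximity feature. It assumes, hypothetically, that the service trusts the coordinates reported by the client and lists every account within a fixed radius (here 10 km, an arbitrary choice) as “nearby,” using great-circle (haversine) distance. The city coordinates are approximate, and the account names and spam text are made up.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
NEARBY_RADIUS_KM = 10.0  # hypothetical "nearby" threshold


@dataclass
class Account:
    name: str
    lat: float
    lon: float
    profile_text: str = ""


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby(viewer: Account, others: list[Account]) -> list[Account]:
    """Accounts a hypothetical 'people nearby' feature would show to viewer."""
    return [
        o for o in others
        if o is not viewer
        and haversine_km(viewer.lat, viewer.lon, o.lat, o.lon) <= NEARBY_RADIUS_KM
    ]


# Fake accounts whose *reported* locations are spoofed to city centres.
SPAM = "Hot deals at spam.example"  # hypothetical profile spam
CITIES = {
    "New York": (40.7128, -74.0060),
    "London": (51.5074, -0.1278),
    "Tokyo": (35.6762, 139.6503),
}
fakes = [Account(f"fake_{i}", lat, lon, SPAM) for i, (lat, lon) in enumerate(CITIES.values())]

# Ordinary users who simply open the "nearby" view in their own city.
alice = Account("alice", 40.7306, -73.9866)  # Manhattan
bob = Account("bob", 51.5155, -0.0922)       # central London

for user in (alice, bob):
    print(user.name, [a.profile_text for a in nearby(user, fakes)])
# alice ['Hot deals at spam.example']
# bob ['Hot deals at spam.example']
```

Because the proximity check only compares reported coordinates, each fake account needs nothing more than a spoofed location to land in the “nearby” lists of users in a target city, which is how the attackers could reach people across many cities without ever being there.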

In addition to embedding malicious text in profile descriptions and manipulating GPS locations to distribute spam, an attack can also exhibit an extended incubation period, making detection even more difficult.

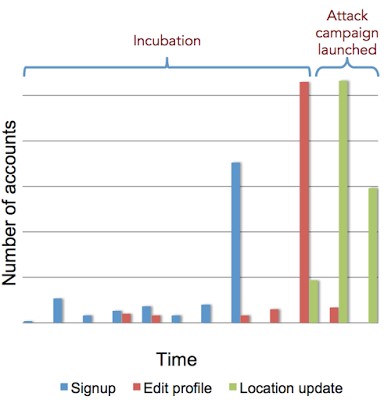

As shown in the timeline below, attackers spent several weeks preparing spam account profiles, including registering fake accounts and editing their profile information in small doses. These “sleeper cell” accounts can circumvent detection for months or years, appearing perfectly benign until right up to attack launch — in this case, spamming “nearby” users. At that point, the damage is already done.

The timeline of a spam attack with an extended incubation period. The attackers embedded spam messages in profile descriptions, and used GPS faker apps to set the fake accounts’ locations to arbitrary cities.

The example above shows how cyber attackers constantly devise new techniques to evade detection, just as online services are adopting new disruptive features to attract more users.

This is a costly problem that desperately needs advanced, predictive security solutions capable of detecting these hidden accounts masquerading as legitimate users. As attack techniques evolve and grow increasingly sophisticated, traditional reactive security solutions are forced to play an endless game of “whack-a-mole” to stop them. If it’s faking GPS this time, what’s next?

Ting-Fang Yen, DataVisor’s director of research, co-authored this article.