In recent years, some of the greatest innovations in IT have come out of cloud computing. IoT, machine learning, containers and many other cutting-edge technologies are made possible by the cloud. The most interesting addition to the application development space is serverless architecture and computing. Serverless harnesses the power of the cloud for developers while abstracting away the management of hardware and infrastructure. In this Function as a Service (FaaS) model, developers can focus on what they do best while the cloud provider takes care of running and scaling their code.

So what is serverless, and why should developers use it? We will dive into these questions and specifically look at Lambda from AWS in depth, trying to uncover:

- Why you would choose Lambda

- The languages it supports

- The anatomy of a Lambda function

- How Lambda interacts with other AWS services

- An example of coding a Lambda function in C# and .NET Core using Visual Studio

What is Serverless?

I hate to say it, but I’m not a fan of the name serverless. When I first heard the term, I naturally wondered, “Where are the servers? What have you done with my servers?” I assure you that we aren’t pushing code to literal clouds here, and no one raided our server racks. There are servers. You just aren’t managing them. The cloud provider completely shoulders this responsibility.

Why Serverless?

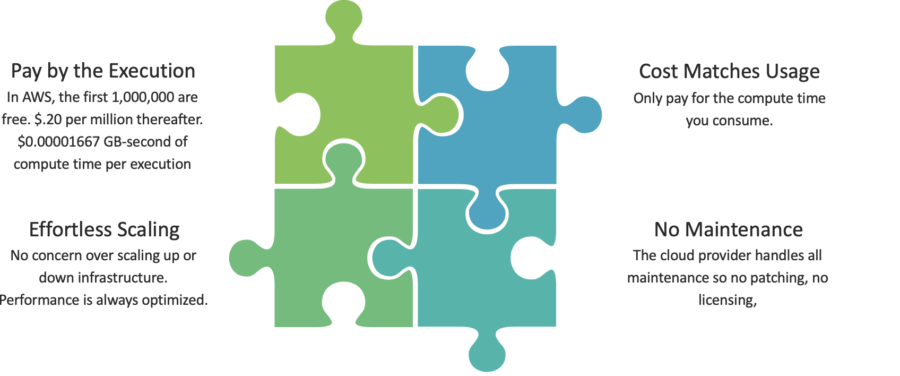

Once you surrender provisioning servers, you can devote your entire focus to developing applications. It is the cloud provider’s responsibility to ensure the performance of your serverless functions is optimized for the load thrust upon them at any given second. There is no fleet of virtual machines to spin up in preparation for Black Friday. No boxes sitting idle at 4:00 am on a random Tuesday. You only pay for what you consume.

This cost-matches-usage model is accomplished through the cloud provider’s pay-by-the-execution approach. The cost proposition is very similar for both Amazon’s AWS Lambda and Microsoft’s Azure Functions. Each month, the first million executions are free, and you only pay twenty cents for each million thereafter. Compute time is billed at $0.00001667 per GB-second, that is, per second of execution for each gigabyte of memory allocated to the function. Depending on your business model, this can translate into huge savings. Financial Engines trimmed their hard costs by 90 percent in their switch to serverless.
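To make the arithmetic concrete, here is a rough sketch of a monthly estimate built only from the figures quoted above. The function name `estimateMonthlyCost` and its parameters are made up for illustration, and the sketch deliberately ignores any free compute allowance or other pricing details a provider may apply, so treat the result as a ballpark rather than a bill.

```javascript
// Rates as quoted in the text; treat them as a snapshot, not current pricing.
const FREE_REQUESTS_PER_MONTH = 1000000;
const PRICE_PER_MILLION_REQUESTS = 0.20; // USD
const PRICE_PER_GB_SECOND = 0.00001667;  // USD

// Hypothetical helper: estimates one month's cost for a single function.
function estimateMonthlyCost(executions, avgDurationMs, memoryMb) {
  // Only executions beyond the free million are charged.
  const billableRequests = Math.max(0, executions - FREE_REQUESTS_PER_MONTH);
  const requestCost = (billableRequests / 1000000) * PRICE_PER_MILLION_REQUESTS;

  // GB-seconds = executions x duration in seconds x memory in GB.
  const gbSeconds = executions * (avgDurationMs / 1000) * (memoryMb / 1024);
  const computeCost = gbSeconds * PRICE_PER_GB_SECOND;

  return { requestCost, computeCost, total: requestCost + computeCost };
}

// 3 million executions a month, 100 ms each, 1024 MB of memory.
const estimate = estimateMonthlyCost(3000000, 100, 1024);
console.log(estimate.total.toFixed(2)); // prints "5.40"
```

In this example the compute charge (about $5.00) dwarfs the request charge ($0.40), which is why execution time and memory size matter more to the bill than the raw number of calls.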

Since you aren’t managing servers, you aren’t plagued by the headaches that come along with server maintenance. Nothing needs to be patched, and there are no software licenses to keep up with.

Flavors of Serverless

Pretty much every major cloud provider has their own version of serverless. Let’s focus on the offerings of the big three:

- AWS Lambda

- Azure Functions

- Google Functions

AWS and Azure are waging a fierce battle around all things cloud. It’s not uncommon to see AWS come out with a new service or feature only to have Azure mirror that functionality in their next release. The differences between their serverless offerings are very slim at this point. Google Functions really isn’t on par with these two. Thus far, Google has lagged badly when it comes to updates to their serverless product, at times taking as much as a year between feature releases. Google Functions is also severely limited by its language support, which includes only NodeJS and Python.

Azure Functions does have broader official language support, as it covers the breadth of languages AWS Lambda supports plus PHP, F#, PowerShell and TypeScript. Lambda does have languages like Rust, Elixir, Scala, and Haskell in the experimental phase, but these aren’t officially supported at this point.

Regardless of the flavor of serverless you choose, it should tie into the cloud services it needs to interact with. If you operate within the Azure cloud, it makes the most sense to go with Azure Functions, and the same applies to AWS and Lambda. It is certainly possible to run cross platform, but you’ll have to do a little extra work to weave the two together. One example might be if you wanted to create an Alexa Skill using Azure Functions. It’s completely doable but isn’t as seamless as tying Alexa directly into Lambda.

Why AWS Lambda?

If you haven’t already tied your horse to a cloud provider, what makes AWS Lambda the superior choice when it comes to serverless? Four things really stand out in Lambda’s favor:

- AWS created serverless computing with the release of Lambda

- 70 percent of the serverless user base runs AWS Lambda

- The AWS cloud is almost double the size of Azure

- AWS innovates and leads on functionality in serverless

It’s hard to ignore the advantage of being the first mover in a technology space. It’s even harder to ignore a company that had the field to itself for two years. That was the situation Amazon found itself in in November 2014 when it announced its new Lambda service at re:Invent. Lambda was built as the engine behind Amazon’s popular voice assistant Alexa. It was such a success that they decided to package it up as a service and release it to the AWS community.

Due to this significant head start, AWS has wrapped up 70 percent of the serverless user base. This number is further supported by the fact that Amazon’s cloud is almost twice the size of Microsoft’s (32 versus 17 percent). AWS has consistently led on functionality with serverless. Since product development was driven by internal stakeholders providing the initial demand and use cases, it is little wonder that Lambda is such a compelling choice when it comes to serverless.

Languages supported by Lambda

AWS Lambda supports six languages presently. These include:

- Java (June 2015)

- Python (October 2015)

- NodeJS (April 2016)

- C# with .NET Core (December 2016)

- Go (January 2018)

- Ruby (November 2018)

As you can see by the release dates, Amazon has been steadily tacking on language support since Lambda’s release. They also have experimental support for additional languages — Haskell, Elixir, Rust, F# (through .NET Core), Scala, Kotlin, Clojure, Groovy (through the Java Virtual Machine) — but they are experimental for a reason.

When looking at performance metrics, we do see some languages outpacing others when viewing cold starts. NodeJS and Python are the fastest cold start languages due to their lightweight runtimes and interpreters. C# is in the middle of the pack with Java being the worst. Both have bulkier runtimes that are harder to package up. This can be significant when analyzing the cost model of Lambda. Longer execution times and heftier memory footprints translate into higher usage costs.

There is no noticeable difference between the languages when analyzing warm starts. Once a function has been invoked, it tends to keep its space in memory for around fifteen to twenty minutes before it’s released.

Composition of a Lambda Function

There are three essential elements to a Lambda function:

- Handler

- Runtime

- Trigger

The runtime relates to what coding language we’ve developed the function in. We outlined these options above. The handler is the code that gets executed when the function is invoked. Its signature will look something like this:

exports.myLambdaFunction = (event, context, callback) => {};

The event is the value that gets passed into the function. This could be a string, an object or any other data we need to work with. The context is an object AWS supplies with information about the current invocation. It exposes several properties, but a few examples include the request ID, the time remaining before the function times out and the log group the function writes to. The callback is a standard error-first JavaScript callback: pass an error as the first argument to signal failure, or null followed by the result to signal success.

Finally, we have the trigger. This is how the function gets invoked. There are a number of ways to trigger a Lambda function. You could trigger a function from another AWS service. For example, an update to a DynamoDB table could trigger Lambda code. For those familiar with RESTful web services, a common use case would be setting up an API Gateway to trigger the Lambda function from an HTTP request. Maybe you have a cron job that runs on an interval and needs to invoke that Lambda function.

Using Lambda with Other Services

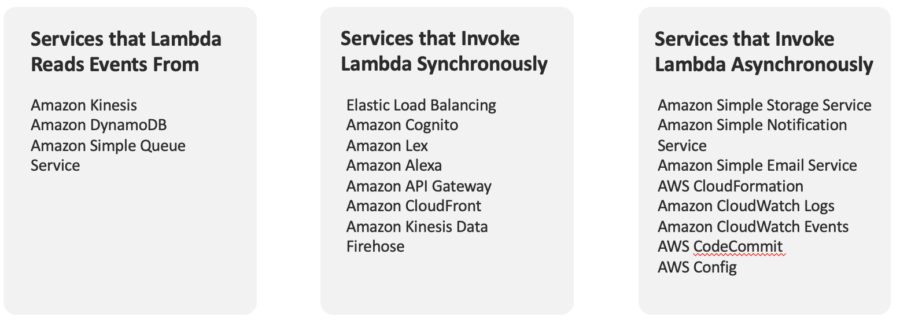

There are lots of AWS services that interact with Lambda. The diagram above shows nineteen of them: services that Lambda reads events from, as well as services that invoke Lambda synchronously or asynchronously.

Setting Up a Lambda Function with .NET Core & C# on Visual Studio

Since I primarily use C# for my server-side programming, I will walk through building a Lambda function in Visual Studio using .NET Core and C#. You never have to leave Visual Studio to get your Lambda function up and running on AWS. To make this happen, you will need Visual Studio 2017 or 2019 so you can download the AWS Toolkit.

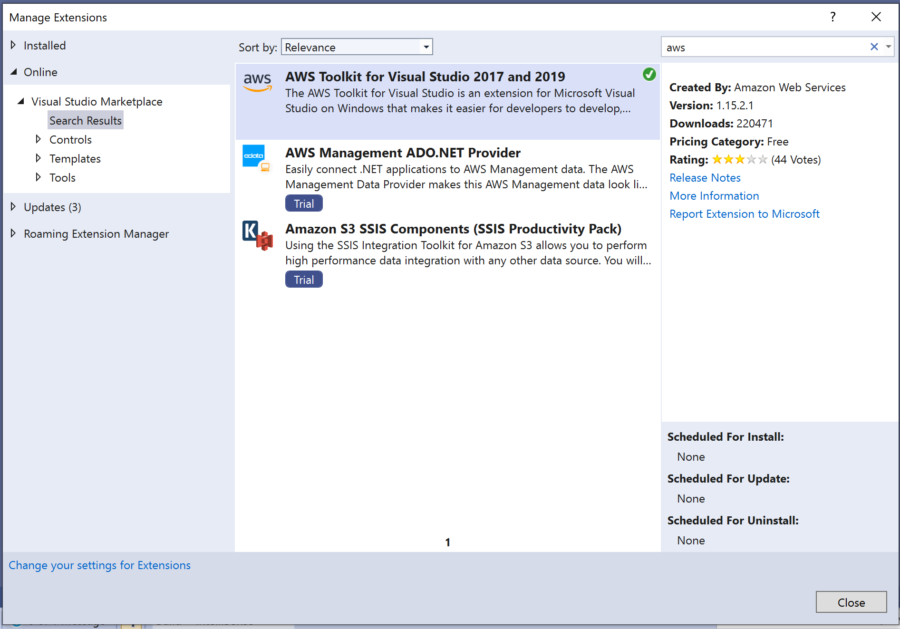

Once you have fired up Visual Studio, go to Extensions > Manage Extensions, which will bring up the window below. Select Online in the left menu, and do a search for aws. Install the AWS Toolkit for Visual Studio 2017 and 2019. Visual Studio will prompt you to restart it to kick off the toolkit install process.

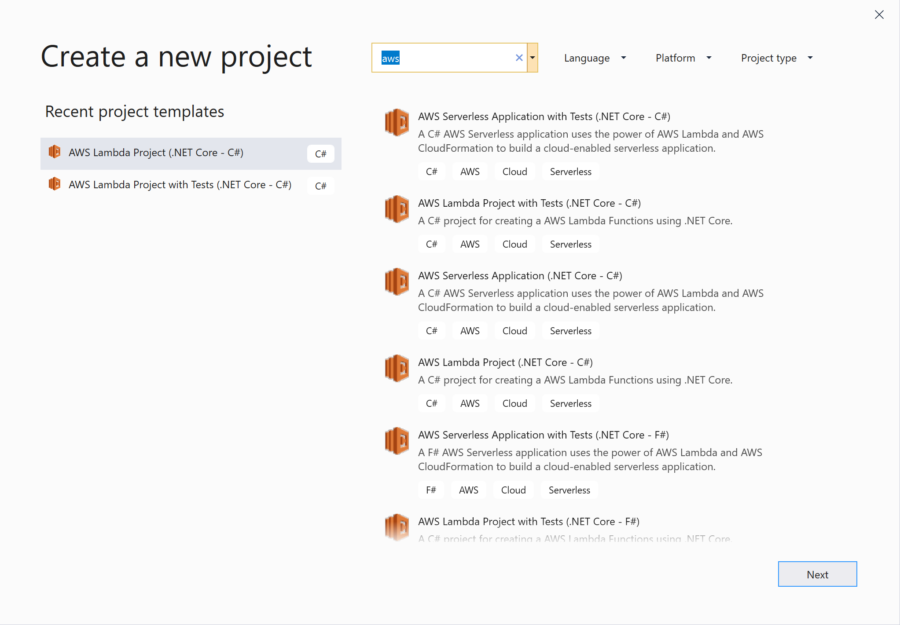

After the AWS Toolkit has been installed, create a new project (File > New > Project) and type in aws in the search bar to bring up all the AWS project templates that were made available from the toolkit. For this example, I will choose AWS Lambda Project with Tests (.NET Core – C#), but you can choose the option that best suits your project needs.

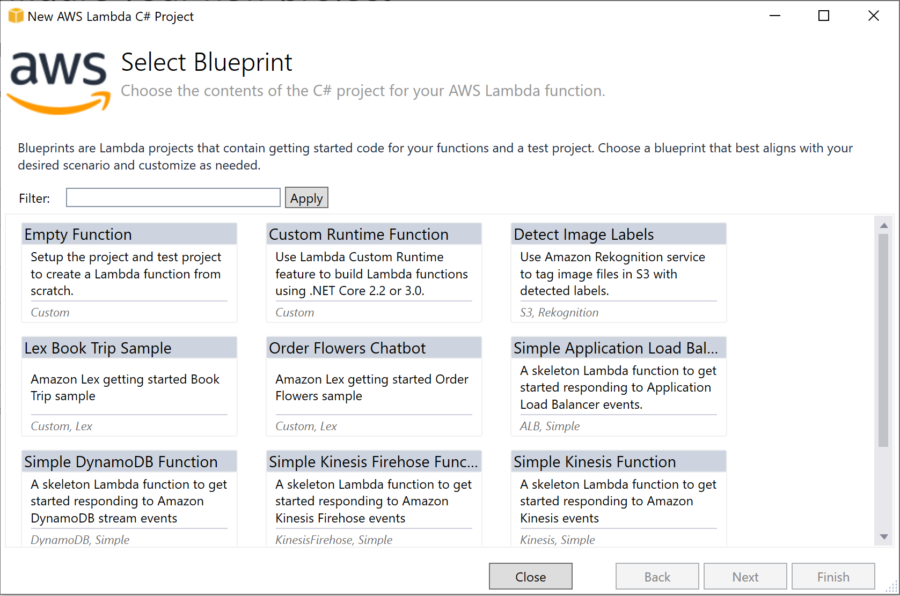

Upon hitting Next, it will ask you to configure your project by setting the project name, solution name and where it will be located. After pressing the Create button, you will be prompted to select a blueprint. This is coming in from AWS and mirrors the setup within the Lambda service console. AWS gives you a set of standard templates you can lean on to see how a DynamoDB function or a chatbot might be set up. For our purposes, we will just select Empty Function.

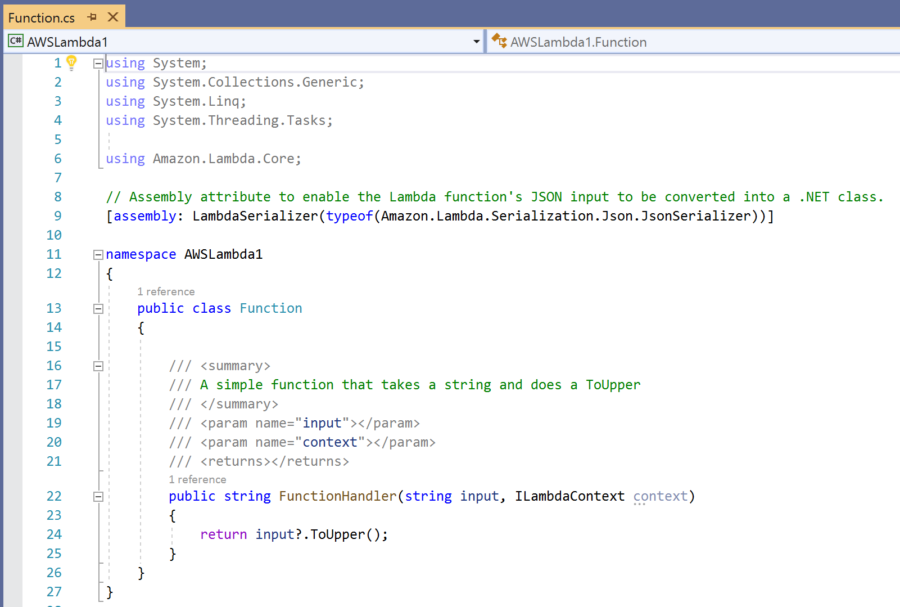

Once you select finish, you will see Visual Studio actively creating your AWS Lambda project. It produces three files within the main project including:

- Readme.md

- Function.cs

- aws-lambda-tools-defaults.json

The Readme.md file is a short read that can be helpful if you are getting up and running with Lambda functions for the first time. The aws-lambda-tools-defaults.json file is your configuration file. It stores values like the function memory size, timeout, AWS region and the .NET Core runtime. You also have the option to set some of these values when you push your code up to Amazon. Finally, Function.cs houses the Lambda code that will be invoked. In the empty function project that we’ve set up, it accepts a string, converts it to upper case and returns that value.

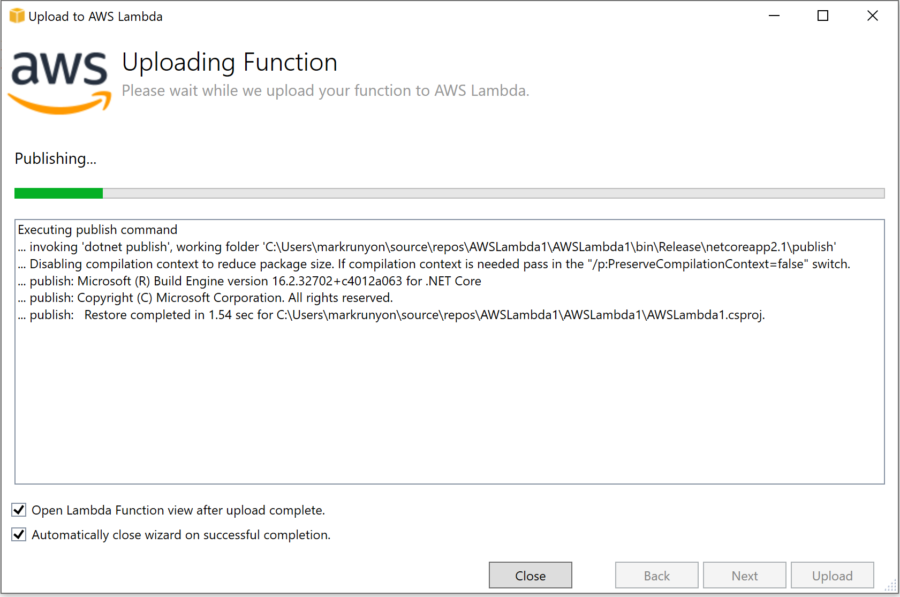

When we have the Lambda function coded how we want it, we can get it up to AWS by right-clicking on our project and selecting Publish to AWS Lambda. Once the Upload Lambda Function window comes up, we have to click on the user button to establish the link to our AWS account. To do this, we enter our access key ID, secret access key and account number. Once complete, we have that profile as an option to select for upload. Similar to the config file we just looked at, there are several options available between this screen and the next, including the AWS region, the language runtime, environment variables and the IAM role the Lambda function will execute under. Once we have defined all these values for our project, we click Upload and watch our Lambda files get pushed up to AWS.

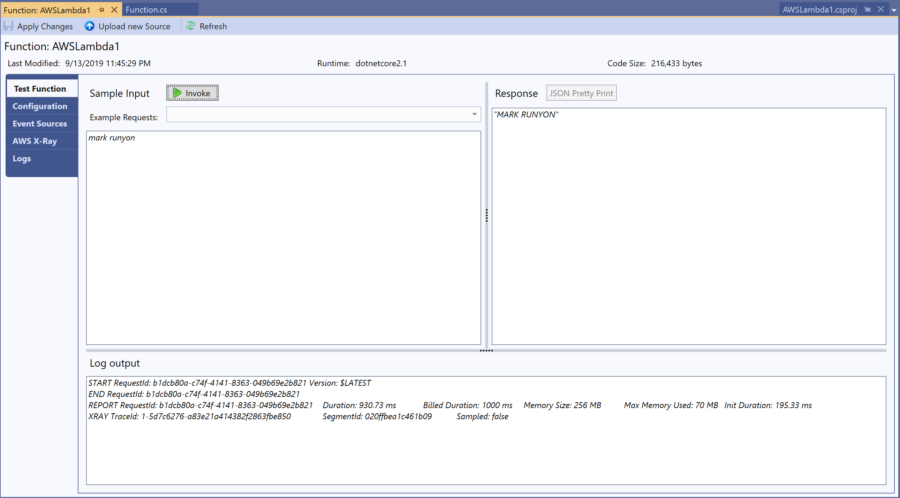

Upon completion of our upload, Visual Studio will pull up a Lambda testing screen where we can submit a test value to our function to verify it is working. In the case of our upper-case conversion function, we can see that submitting my name as a string does, in fact, convert it to all caps.

Visual Studio allows you to publish projects within the .NET Core framework (C# and F#) as well as NodeJS. You could also do this directly through the AWS Lambda console. It even has an IDE where you could code directly in your browser. I personally find Visual Studio to be a better option when building Lambda functions in .NET Core, but other options are available.

While we’ve focused on AWS Lambda, the real star is serverless. Serverless fulfills the potential of application development in the cloud. It leverages the seamless ability to scale and ties that into a cost model that is based solely on usage. It also easily interacts with other cloud services as well as the traditional RESTful execution model. Serverless truly is the next step in application development.