Google Analytics shows 104 conversions. Your CRM shows 123 new leads. Heap reports 97. And so on.

It’s easy to get frustrated by data discrepancies. Which source do you trust? How much variance is okay? (Dan McGaw suggests 5%.)

For most companies, Google Analytics is a—often the—primary source of analytics data. Getting its numbers aligned with other tools in your martech stack keeps results credible and blood pressure manageable.

This post covers discrepancies between Google Analytics and your:

- CRM, CMS, accounting, or other back-end software.

- A/B testing tool, personalization tool, or some other analytics tool.

- Google Ads.

We’ll show you what causes those discrepancies with Google Analytics data and how to resolve (or, at least, minimize) them.

But before you diagnose a “discrepancy”…

Before you start comparing different data sources and looking for discrepancies:

1. You need to know how each tool operates. That includes how each one defines and measures sessions, users, conversions, etc.

Even tools from the same company, like Google Analytics and Google Optimize, report different numbers. (So do Google Analytics and Google Ads.)

Not surprisingly, differences are greater in tools from different companies. For example, “conversion rate” in Google Analytics is conversions/sessions, while in VWO and Optimizely it’s conversions/unique visitors.

Drilling down further, Google Analytics and VWO limit a conversion to once per session or visitor, while Optimizely allows you to count every conversion.

2. The comparison date range should be long enough to include a decent amount of data, and it shouldn’t be from too far in the past (because something might have changed in the setup).

In general, the previous month or last 30 days is a safe pick.

3. Don’t choose metrics that are similar but not the same. There’s not much point in comparing sessions to users or unique conversions per user to total conversions, etc.

4. When identifying a discrepancy, get as granular as possible. Knowing that you have a 15% difference in overall transactions doesn’t tell you much—knowing that 100% of PayPal transactions are missing is much better.
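To make the granular comparison concrete, here is a minimal Python sketch. It assumes you have already exported conversion counts per segment (here, payment method) from Google Analytics and from your back end. The segment names and numbers are illustrative, and treating the back end as the reference count is an assumption. The 5% tolerance is the figure Dan McGaw suggests.

```python
TOLERANCE = 0.05  # 5% variance, per Dan McGaw's rule of thumb


def discrepancy(ga_count: int, backend_count: int) -> float:
    """Relative gap, treating the back end as the reference count.

    Positive values mean Google Analytics reports fewer conversions than the back end.
    """
    if backend_count == 0:
        return 0.0 if ga_count == 0 else float("-inf")
    return (backend_count - ga_count) / backend_count


def flag_segments(ga: dict[str, int], backend: dict[str, int]) -> dict[str, float]:
    """Return only the segments whose gap exceeds the tolerance in either direction."""
    gaps = {
        segment: discrepancy(ga.get(segment, 0), backend.get(segment, 0))
        for segment in sorted(set(ga) | set(backend))
    }
    return {segment: gap for segment, gap in gaps.items() if abs(gap) > TOLERANCE}


# Illustrative numbers only.
ga_by_payment = {"credit_card": 95, "paypal": 0}
backend_by_payment = {"credit_card": 96, "paypal": 18}

overall = discrepancy(sum(ga_by_payment.values()), sum(backend_by_payment.values()))
print(f"Overall gap: {overall:.1%}")  # Overall gap: 16.7%
print(flag_segments(ga_by_payment, backend_by_payment))  # {'paypal': 1.0}
```

The overall 16.7% gap only tells you something is off. The per-segment view shows that credit card purchases are within tolerance and every PayPal transaction is missing.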

And before you try to fix one…

After figuring out what could be broken, go through the funnel yourself to confirm that the events you suspect are actually missing or broken, or to find out whether something else is off.

In most cases, fixing discrepancies requires some work by developers, analytics implementation specialists, or other experts. Don’t start editing, adding, or removing tags or snippets without proper knowledge of how those tools work.

Otherwise, it’s easy to turn a small discrepancy into a massive issue—one that torches year-over-year data comparisons and just about all of your quarterly (even annual) metrics.

1. Data discrepancies between Google Analytics and a CRM, CMS, accounting, or other back-end software

Issue: Google Analytics conversions are duplicated.

Why does it happen?

A confirmation page is reloaded, bookmarked, or simply loads multiple times.

How to fix it

Confirmation pages should be unique and viewable only once. You can achieve this by including a unique ID within the URL.

So, instead of a generic:

- https://shop.com/checkout/thank-you

Every transaction would include an ID within the URL:

- https://shop.com/checkout/9873230974/thank-you

If you reload the page or access it from a bookmark, you should be redirected automatically to a different but relevant page, like an order details page:

- https://shop.com/checkout/9873230974/order-details

This makes sense for another reason, too—you don’t want a recent purchaser to reload a page that makes it seem as though a repeat payment went through.
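Here is a minimal, framework-agnostic sketch of that redirect logic in Python. It assumes a hypothetical `viewed_orders` store (an in-memory set here; in production it would live in your database or session store) and reuses the URL pattern from the examples above.

```python
viewed_orders: set[str] = set()  # hypothetical store; use a database in production


def confirmation_path(order_id: str) -> str:
    """Decide which page to serve for a confirmation request.

    The first request gets the thank-you page, where the conversion fires.
    Reloads and bookmarked visits go to order details, which fires no conversion.
    """
    if order_id in viewed_orders:
        return f"/checkout/{order_id}/order-details"
    viewed_orders.add(order_id)
    return f"/checkout/{order_id}/thank-you"


print(confirmation_path("9873230974"))  # /checkout/9873230974/thank-you
print(confirmation_path("9873230974"))  # /checkout/9873230974/order-details
```

Your web framework would then issue an HTTP redirect (for example, a 302) to the returned path whenever it differs from the one requested.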

Ensure confirmation pages are set to “noindex.” “Thank you” pages for lead forms are often generic and, by default, indexable by search engines.

This can cause those pages to appear in search results for brand queries (even showing up as sitelinks beneath the homepage).

In most cases, that won’t happen, but having those pages indexed is a liability—a Google Analytics Goal set up as a destination URL will register a conversion for anyone visiting that page directly from search.
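Besides adding `<meta name="robots" content="noindex">` to the page’s HTML, you can send an `X-Robots-Tag: noindex` HTTP response header, which Google also honors. The minimal Python sketch below adds that header for confirmation paths. The path endings are assumptions based on the example URLs above.

```python
# Hypothetical path endings for confirmation pages; adjust to your own URLs.
CONFIRMATION_SUFFIXES = ("/thank-you", "/order-details")


def response_headers(path: str) -> dict[str, str]:
    """Build response headers, marking confirmation pages as noindex."""
    headers = {"Content-Type": "text/html; charset=utf-8"}
    # str.endswith accepts a tuple, so any listed suffix matches.
    if path.rstrip("/").endswith(CONFIRMATION_SUFFIXES):
        headers["X-Robots-Tag"] = "noindex"
    return headers


print(response_headers("/checkout/9873230974/thank-you").get("X-Robots-Tag"))  # noindex
print(response_headers("/pricing").get("X-Robots-Tag"))  # None
```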

Issue: Google Analytics includes test transactions and other testing traffic.

Why does it happen?

QA. Sometimes companies have their QA specialist complete the funnel every once in a while to make sure everything works correctly.

Or a bot might be set up to check the funnel regularly. Or someone might want to see the thank-you page and complete a test transaction to get to it.

Payment process changes. When something changes with payment gateways, companies usually test the new setup with some dummy transactions that they later cancel/refund.

In all these cases, you don’t want those transactions to show up in Google Analytics.

How to fix it

The solutions are straightforward. While one or the other may be enough, including both is the safest path:

- Test conversion flows on a staging URL.

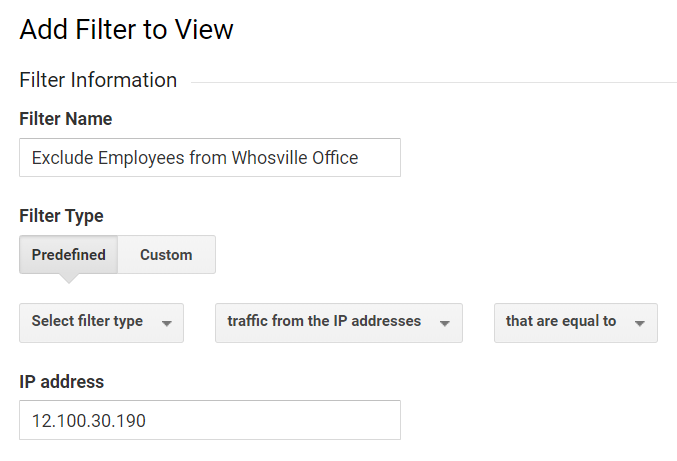

- Exclude office IP addresses using Google Analytics filters.
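Google Analytics filters are configured in the admin interface, not in code. However, the same exclusion logic is useful when you reconcile exported data or filter server-side hits. Here is a minimal Python sketch. The office networks (documentation-only address ranges) and the staging hostname are placeholders.

```python
import ipaddress

# Placeholders: replace with your real office networks and staging hosts.
OFFICE_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]
STAGING_HOSTS = {"staging.shop.com"}


def is_test_traffic(client_ip: str, hostname: str) -> bool:
    """True if a hit came from a staging host or an office IP address."""
    if hostname in STAGING_HOSTS:
        return True
    address = ipaddress.ip_address(client_ip)
    # An IPv6 address is never "in" an IPv4 network, so mixed versions return False.
    return any(address in network for network in OFFICE_NETWORKS)


print(is_test_traffic("203.0.113.45", "shop.com"))  # True
print(is_test_traffic("192.0.2.10", "shop.com"))  # False
```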

Issue: Google Analytics is missing some conversions.

Why does it happen?

Your setup may be broken. For example, Events or pageviews are sent too late because several scripts fire before Google Analytics, leading a user to close their browser window before the Google Analytics script runs.

Your setup changes over time. For example, a conversion page is replaced by on-page confirmation of a form submission, but Google Analytics Goals aren’t updated from destination URLs to Events.

Different conversion opportunities trigger the same Goal. Google Analytics tracks a maximum of one conversion per Goal per user per session.
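To see how several conversion opportunities that feed one Goal can lead to an undercount, here is a small Python sketch. It applies the one-conversion-per-Goal-per-session rule to a list of form submissions. The form names and the form-to-Goal mapping are hypothetical.

```python
# Hypothetical mapping: two different forms both trigger the "Lead" Goal.
GOAL_FOR_FORM = {"newsletter_form": "Lead", "demo_form": "Lead"}

# (session_id, form) pairs, as your back end might log them.
submissions = [
    ("s1", "newsletter_form"),
    ("s1", "demo_form"),  # same session, same Goal: not counted again
    ("s2", "demo_form"),
]


def goal_completions(subs: list[tuple[str, str]]) -> int:
    """Goal-style count: at most one completion per Goal per session."""
    return len({(session, GOAL_FOR_FORM[form]) for session, form in subs})


def event_count(subs: list[tuple[str, str]]) -> int:
    """Event-style count: every submission is counted."""
    return len(subs)


print(goal_completions(submissions), event_count(submissions))  # 2 3
```

Your back end records three leads, but the Goal reports two.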

How to fix it

Test your setup when creating new conversion opportunities and review it periodically. A light health check every 3–6 months makes sense.

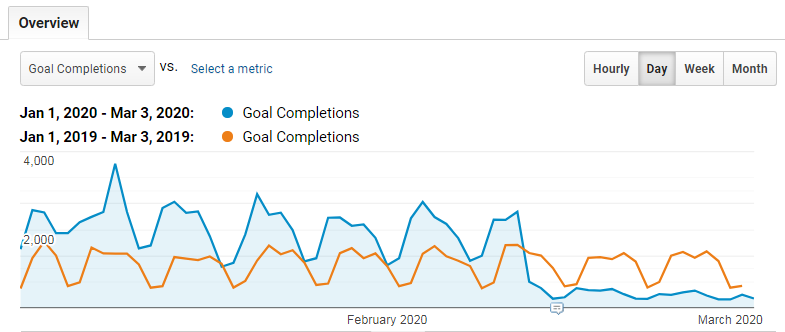

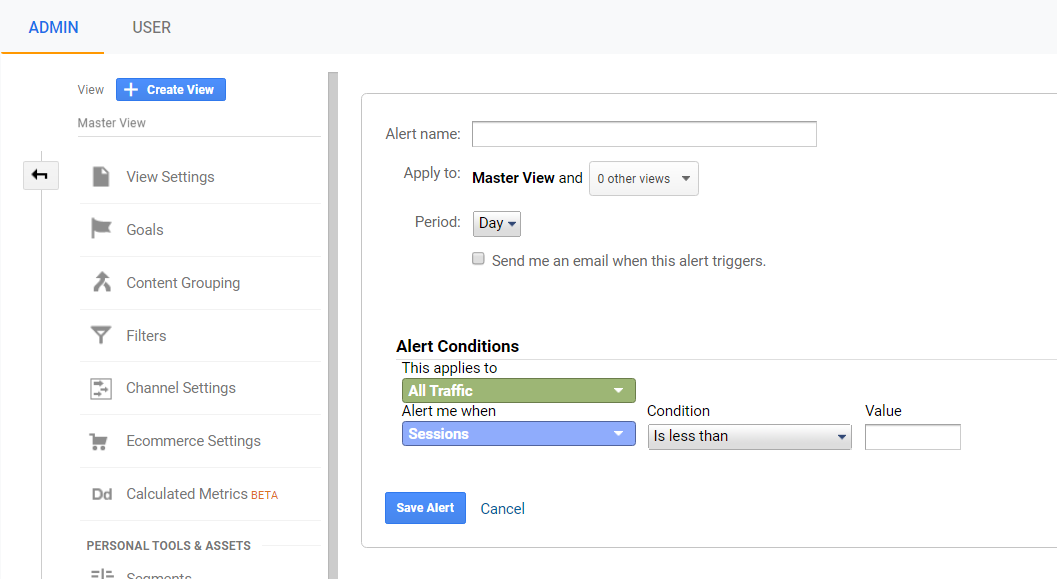

You can also set up custom alerts in Google Analytics to spot a dramatic change in conversions. (Use with discretion: It’s easy to flood your inbox with false positives.)
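Custom alerts compare a metric against an earlier period and fire when the change crosses a threshold. The Python sketch below mirrors that idea for a week-over-week drop in conversions. The 50% threshold and the minimum-volume guard are assumptions, not Google Analytics defaults. The guard suppresses alerts on low-volume days, which is one way to cut down on false positives.

```python
def should_alert(
    today: int,
    same_day_last_week: int,
    max_drop: float = 0.5,
    min_baseline: int = 20,
) -> bool:
    """Flag a week-over-week drop in conversions larger than max_drop.

    Skips low-volume baselines, where random swings produce false positives.
    """
    # max(..., 1) also protects against dividing by zero.
    if same_day_last_week < max(min_baseline, 1):
        return False
    drop = (same_day_last_week - today) / same_day_last_week
    return drop > max_drop


print(should_alert(today=12, same_day_last_week=40))  # True: a 70% drop
print(should_alert(today=30, same_day_last_week=40))  # False: a 25% drop
print(should_alert(today=0, same_day_last_week=5))  # False: baseline too small
```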

If there can be more than one conversion per session, use Google Analytics Events instead of confirmation pages.

Issue: Google Analytics isn’t tracking some payment gateways.

Why does it happen?

Not all payment processors get tracked. Credit card purchases, for example, may be tracked while PayPal or Amazon purchases are not.

(Cross-domain tracking between your site and payment providers can also disrupt the source information for transactions, although the transactions themselves will still be reported.)

How to fix it

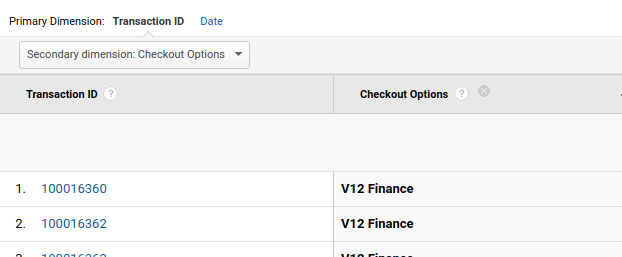

Store payment gateway information in Google Analytics. You can store the information with a custom dimension. Then, check which payment type has issues by comparing against back-end numbers.
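One way to record the payment method on the server side is to send a Universal Analytics Measurement Protocol (v1) hit that carries a hit-scoped custom dimension alongside the transaction. The Python sketch below only builds the payload; you would POST it to `https://www.google-analytics.com/collect`. The dimension index 5, the tracking ID, and the client ID are placeholders. If your tags run client-side, you would set the same custom dimension in your tag configuration instead.

```python
from urllib.parse import urlencode

PAYMENT_DIMENSION_INDEX = 5  # placeholder: the index of your hit-scoped custom dimension


def purchase_payload(
    tracking_id: str,
    client_id: str,
    transaction_id: str,
    revenue: float,
    payment_method: str,
) -> str:
    """Build a URL-encoded Measurement Protocol v1 body for an Enhanced Ecommerce purchase."""
    params = {
        "v": "1",  # protocol version
        "tid": tracking_id,  # property ID, e.g. UA-XXXXX-Y
        "cid": client_id,  # anonymous client ID
        "t": "pageview",  # hit type
        "dp": f"/checkout/{transaction_id}/thank-you",  # document path
        "pa": "purchase",  # Enhanced Ecommerce product action
        "ti": transaction_id,  # transaction ID
        "tr": f"{revenue:.2f}",  # transaction revenue
        f"cd{PAYMENT_DIMENSION_INDEX}": payment_method,  # custom dimension value
    }
    return urlencode(params)


print(purchase_payload("UA-XXXXX-Y", "555", "9873230974", 49.9, "paypal"))
```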

As Jamie Shields details, you can also adjust Google Analytics’ settings to capture that data (as long as you’ve already enabled Enhanced Ecommerce).

2. Data discrepancies between Google Analytics and an A/B testing tool, personalization tool, or some other analytics tool

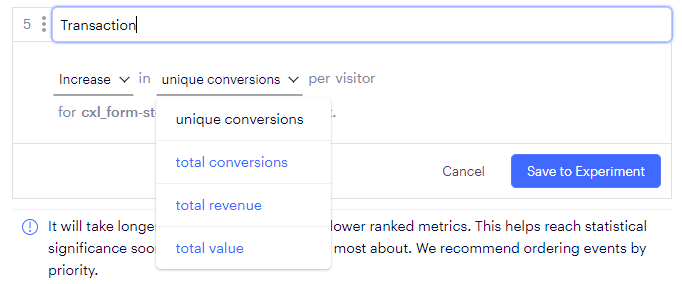

Every tool has its own methods for various metrics. For example, while Google Analytics tracks one conversion per Goal per user per session, other tools count every conversion.

In some cases, you can change this rule. When possible, make sure you have a similar setup in all the tools you plan to compare. If a site has different payment gateways, make sure all of them (e.g., credit card, PayPal, Apple Pay) are tracked the same way.

Before starting a comparison, make sure you understand how each tool works and the definitions for their terminology (session, visit, visitor, user, etc.). If possible, try replicating rulesets from other tools in Google Analytics.

To replicate the user-level setup that most testing tools use in Google Analytics, create user-scoped (not session-scoped) custom segments: one for all visitors and one for users who had at least one conversion.
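The effect of scope is easy to see with a handful of sessions. This Python sketch computes three conversion rates from the same invented data. The first is session-scoped, as Google Analytics reports a single Goal (conversions/sessions, at most one per session). The second is user-scoped with unique conversions (conversions/unique visitors, once per visitor, as in VWO). The third is user-scoped and counts every conversion, which Optimizely allows.

```python
# Toy data: (user_id, session_id, goal conversions in that session).
sessions = [
    ("u1", "s1", 0),
    ("u1", "s2", 1),
    ("u2", "s3", 2),
    ("u3", "s4", 0),
]


def session_rate(rows: list[tuple[str, str, int]]) -> float:
    """Sessions with at least one conversion / all sessions."""
    converting = sum(1 for _, _, conversions in rows if conversions > 0)
    return converting / len(rows)


def unique_user_rate(rows: list[tuple[str, str, int]]) -> float:
    """Users with at least one conversion / unique users."""
    users = {user for user, _, _ in rows}
    converters = {user for user, _, conversions in rows if conversions > 0}
    return len(converters) / len(users)


def every_conversion_rate(rows: list[tuple[str, str, int]]) -> float:
    """All conversions / unique users (every conversion counted)."""
    users = {user for user, _, _ in rows}
    return sum(conversions for _, _, conversions in rows) / len(users)


print(session_rate(sessions))  # 0.5
print(unique_user_rate(sessions))  # 2 of 3 users, about 0.667
print(every_conversion_rate(sessions))  # 1.0
```

Same visitors, same behavior, three different “conversion rates.” This is why matching segment scope matters before you compare tools.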

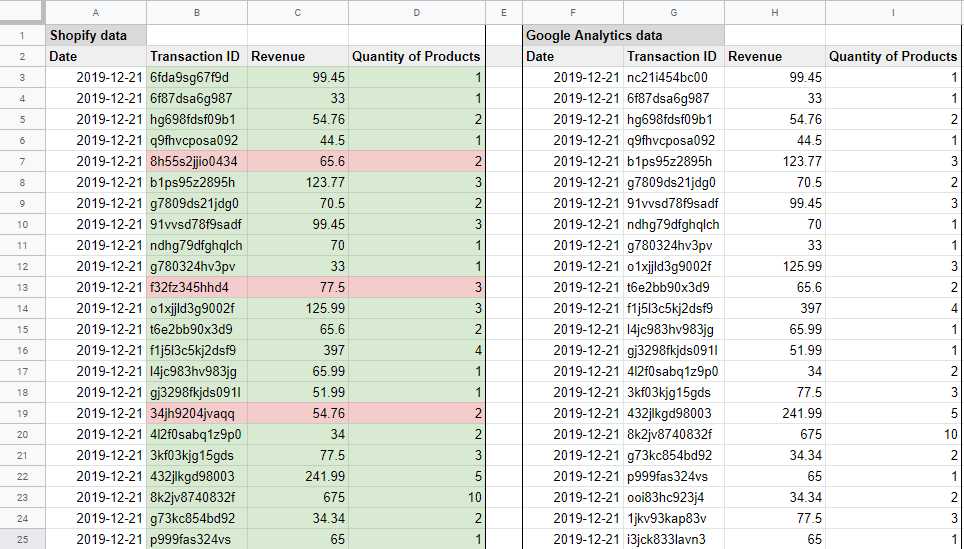

You can do this with custom reports, custom segments, etc., to see if you can get matching (or at least closer) numbers. If a tool provides hit-, conversion-, or transaction-level data, compare the unique IDs to detect which data is missing.
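A minimal Python sketch of that ID-level comparison follows. It assumes you can export transaction IDs from both systems, for example through a Google Analytics report that uses the Transaction ID dimension. The IDs below are invented.

```python
from collections import Counter


def reconcile_ids(backend_ids: list[str], ga_ids: list[str]) -> dict[str, list[str]]:
    """Compare transaction IDs exported from the back end and from Google Analytics."""
    backend, ga = set(backend_ids), set(ga_ids)
    return {
        # Tracking gaps: recorded in the back end, never reached Google Analytics.
        "missing_in_ga": sorted(backend - ga),
        # Possibly test orders, or IDs the back-end export dropped.
        "only_in_ga": sorted(ga - backend),
        # Recorded more than once, e.g. from reloaded confirmation pages.
        "duplicated_in_ga": sorted(i for i, n in Counter(ga_ids).items() if n > 1),
    }


report = reconcile_ids(
    backend_ids=["1001", "1002", "1003", "1004"],
    ga_ids=["1001", "1002", "1002", "1004", "9999"],
)
print(report)
# {'missing_in_ga': ['1003'], 'only_in_ga': ['9999'], 'duplicated_in_ga': ['1002']}
```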

Here are some common scenarios in which the varying logic can get in the way of accurate results.

Issue: The custom segment setup in Google Analytics is wrong.

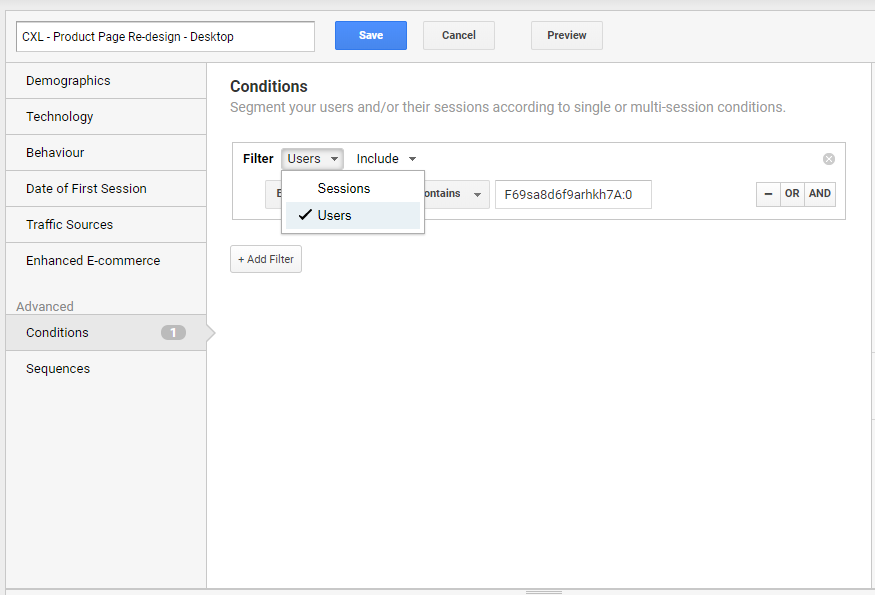

When analyzing A/B testing data in Google Analytics, you usually start by building a custom segment for each of your variants. This can involve default experiment/variant dimensions, custom dimensions, and custom events.

Most testing tools provide guidelines for building Google Analytics segments in the part of their documentation that covers their Google Analytics integration. Here’s an example from VWO:

You will likely need to decide whether to build session- or user-based segments. (Most testing tools default to user-based segments; Google Analytics defaults to session-based ones.)

Issue: Custom dimension values are overwritten by another experiment.

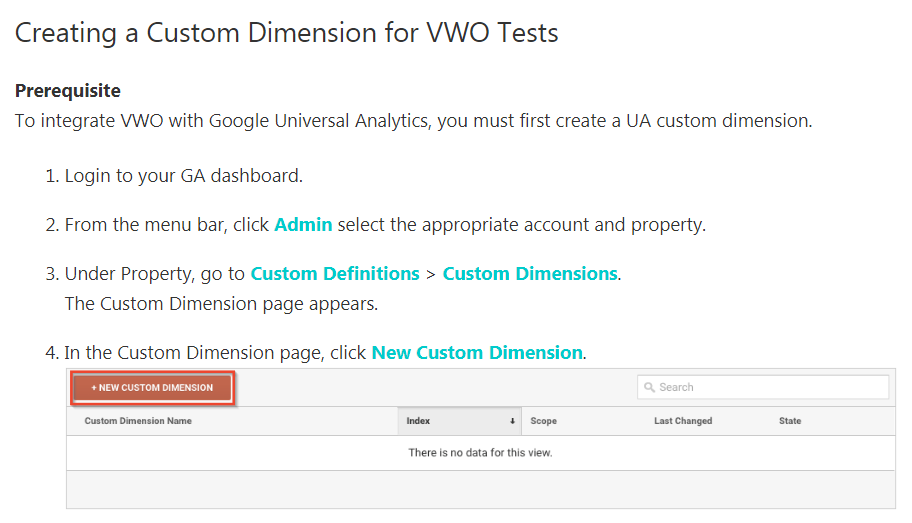

Many testing and personalization tools rely on custom dimensions for their integrations. This means that the user has to select a custom dimension every time they set up a new test.

Since those dimensions are on either a session- or user-level scope, multiple experiments can send data into the same dimension, and the latest value will overwrite all previous values.

Keep track of your dimensions and make sure no simultaneous experiments use the same dimension. In testing tools, name your experiments something like “Category Page – Filter Area Focus – Desktop – GA12.”

GA12 refers to custom dimension 12 in Google Analytics and the corresponding setting in the testing tool for that experiment. This way, you can check that no other live experiment has GA12 in its name.
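With that naming convention in place, a short script can catch conflicts before they overwrite data. This Python sketch assumes every experiment name ends with “GA” plus the dimension index. Apart from the first name, which comes from the example above, the experiment names are hypothetical.

```python
import re
from collections import defaultdict

# The name must end with "GA" followed by the custom dimension index.
DIMENSION_TAG = re.compile(r"\bGA(\d+)$")


def dimension_conflicts(live_experiments: list[str]) -> dict[int, list[str]]:
    """Group live experiments by custom dimension and keep only shared dimensions."""
    by_dimension: dict[int, list[str]] = defaultdict(list)
    for name in live_experiments:
        match = DIMENSION_TAG.search(name.strip())
        if match:
            by_dimension[int(match.group(1))].append(name)
    return {dim: names for dim, names in by_dimension.items() if len(names) > 1}


live = [
    "Category Page – Filter Area Focus – Desktop – GA12",
    "Checkout – Trust Badges – Mobile – GA12",
    "Homepage – Hero Copy – All – GA7",
]
print(dimension_conflicts(live))  # dimension 12 is shared by the first two experiments
```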

Another solution is to use custom Events instead of dimensions, but that usually requires some custom development work. (At CXL Agency, we set this up for most clients.)

Issue: There’s a difference in numbers between Google Analytics and Google Optimize.

Even though both tools should rely on the same dataset, discrepancies can still happen.

All metrics you see in Optimize are first processed by Analytics, then pushed to Optimize. The push to Optimize can take up to 12 hours. As a result, you will generally see more Experiment Sessions in Analytics than in Optimize.

Additionally, when you end an experiment, data accrued between the last push to Optimize and the end of the experiment will not be pushed to Optimize (but will be available in Analytics).

Conversion rates are also calculated differently. Google’s Optimize documentation explains:

Optimize is calculating the actual conversion rate for a variant. This value may not yet be represented by a variant’s observed conversion rate, especially early-on in the experiment. You can expect the future conversion rate of a variant to fall into the range you see in Optimize 95 percent of the time.

Conversely, the conversion rate metrics you see for the same experiment in the Analytics Content Experiment report are an empirical calculation of Conversions / Experiment Sessions. These two conversion rate values (the modeled conversion rate range in Optimize and the observed conversion rate in Analytics) are expected to be different, and we recommend that you use the values in Optimize for your analysis.
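To see why a modeled range and an observed rate can differ, consider this Python sketch. It computes the observed conversion rate (Conversions / Experiment Sessions) and a 95% interval from a Beta posterior with a uniform prior, estimated by sampling. This is a generic Bayesian illustration under those assumptions, not Optimize’s actual model.

```python
import random


def observed_rate(conversions: int, sessions: int) -> float:
    """Empirical rate, as in the Analytics report: Conversions / Experiment Sessions."""
    return conversions / sessions


def interval_95(
    conversions: int, sessions: int, draws: int = 20_000, seed: int = 0
) -> tuple[float, float]:
    """95% interval of a Beta(1 + conversions, 1 + non-conversions) posterior, by sampling."""
    rng = random.Random(seed)
    samples = sorted(
        rng.betavariate(1 + conversions, 1 + sessions - conversions) for _ in range(draws)
    )
    return samples[int(0.025 * draws)], samples[int(0.975 * draws) - 1]


for conversions, sessions in [(3, 100), (30, 1000)]:
    low, high = interval_95(conversions, sessions)
    rate = observed_rate(conversions, sessions)
    print(f"observed {rate:.1%}, range {low:.1%} to {high:.1%}")
```

Both cases have the same observed rate, but the range is much wider with less data. That is why, early in an experiment, the two numbers can look far apart.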

3. Data discrepancies between Google Ads and Analytics

Google’s official documentation describes the causes of discrepancies between Google Ads and Google Analytics, including:

- Comparing long date ranges may include periods during which your accounts were not linked.

- Linking multiple Google Ads accounts to the same Analytics view complicates the information in your reports.

- Filters may remove some of the data from your Analytics reports. Check that there are no filters editing your campaign final URLs.

- Google Ads data is imported into Analytics at the time you view your report, so data is current as of the most recent hour.
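For the first point, a simple safeguard is to clip your comparison range to the period when the accounts were linked. This Python sketch assumes a single, uninterrupted link that started on a known date. If you unlinked and relinked, you would need to handle multiple intervals.

```python
from datetime import date
from typing import Optional


def comparable_range(
    start: date, end: date, linked_since: date
) -> Optional[tuple[date, date]]:
    """Clip a report date range to the days when Google Ads and Analytics were linked."""
    clipped_start = max(start, linked_since)
    if clipped_start > end:
        return None  # the accounts were not linked at any point in this range
    return clipped_start, end


print(comparable_range(date(2021, 1, 1), date(2021, 3, 31), linked_since=date(2021, 2, 15)))
# (datetime.date(2021, 2, 15), datetime.date(2021, 3, 31))
```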

Conclusion

Minor data discrepancies are a reality—even a “perfect” setup won’t avoid some variance among reporting tools. But, in almost every case, we see plenty of discrepancies that can—and should—be corrected.

Before you get started, make sure you have a clear understanding of how each tool processes data. Some solutions, like excluding your internal IP address, take just a couple of minutes to implement.