In its most significant update since the release of GPT-4, OpenAI is adding voice and image processing capabilities to ChatGPT. Soon ChatGPT Plus and Enterprise subscribers will be able to show the AI model images and even have entire voice conversations.

The features will roll out over the next two weeks for Plus and Enterprise subscribers and soon after for everyone else. Voice features will be limited to the iOS and Android ChatGPT apps, but image processing will be available on all platforms from the beginning.

While the addition of image processing is likely a major improvement for some use cases of ChatGPT, the new voice chat features are an even more exciting addition. You will soon be able to have a full spoken conversation with one of the best AI models on the planet.

You will be able to record audio prompts at the press of a button (or enter text prompts) and ChatGPT will speak back using one of a handful of unique voices.

Some onlookers are skeptical, expecting ChatGPT’s voice functionality to sound robotic and unnatural. The critics may prove to be wrong, judging by the few voice snippets that OpenAI provided in its blog post announcing the features.

The post included a clip of someone asking ChatGPT to read them a bedtime story, and the AI model responded within seconds in an almost entirely realistic voice. Those paying close attention can tell that it’s not a real person because of oddities like small pronunciation errors, but it sounds remarkably good.

The blog post included samples of five different AI voices (both male and female) reading five different text samples, and they all sound great. OpenAI said it worked with professional voice actors to create these voices, which are generated by a new text-to-speech model. Whisper, its own speech recognition model, handles the other direction by transcribing the user’s spoken words into text.

It’s important to note that the samples may have been cherry-picked and that the final feature may not always sound as good. ChatGPT Plus and Enterprise subscribers will soon be able to test it themselves.

OpenAI also announced that it partnered with the king of music streaming, Spotify, to build a new feature that will translate podcasts into other languages using the same voice technology. It is currently available only for a few large podcasts from podcasters like Dax Shepard, Lex Fridman, and Monica Padman, but more will be added soon.

OpenAI and Spotify likely decided to only make this feature available to large podcasts to avoid giving voices to potentially harmful content.

OpenAI Looks to Impress With Image Processing Capabilities

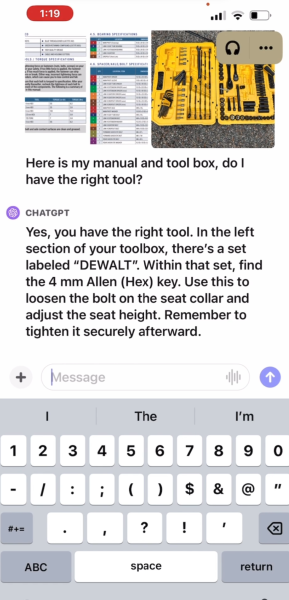

One pioneering new feature apparently wasn’t enough for OpenAI. ChatGPT’s new image processing capabilities look quite impressive too. The example that OpenAI gave in its blog post showed someone using the app to learn how to lower his bicycle seat.

The sample video showed that users will be able to snap a quick picture and draw on it to tell the model what to focus on. Once they are happy with the picture they can add a text prompt to ask the model something about the image. You can also add multiple images to a message.

The sample video showed the user going back and forth with ChatGPT, sending images of parts of the bike to give the model enough information to help him. With just a few images of the bike, the manual, and the user’s toolbox, it was able to walk him through the process, even telling him which tool he needed to use.

Of course, this particular session was picked specifically for the blog post announcement and ChatGPT may not be as helpful for many prompts.

Will These New Features Be Safe?

Every time a pioneering tech company like OpenAI releases potentially world-changing products or features, we have to ask: is this safe? OpenAI appears to have considered the risks of misuse closely and tailored the implementation of its impressive voice tech to avoid them for the most part.

Releasing a public version of the base voice model that can generate a voice from a few samples could be quite dangerous. Bad actors may try to use it to impersonate public figures, perhaps combining the voice with a matching deepfake video.

OpenAI sidestepped this risk by restricting the feature to a few preset voices that can’t be used to impersonate public figures.

The AI firm also took steps to make its image processing feature as safe as possible. It said that it tested the model with red teamers (also known as white hat hackers), cybersecurity pros who try to attack the model to find its vulnerabilities, as well as many alpha testers.

Interestingly, the blog post mentioned that it restricted the model from analyzing and making direct statements about people in images because “ChatGPT is not always accurate and these systems should respect individuals’ privacy.” Perhaps it was inadvertently insulting people in the images it received.

The quality of the voice and image processing features as well as their potential flaws will soon be explored by millions of curious AI enthusiasts. If they are as powerful as the blog post makes them out to be, they may have a tremendous impact on AI and the world in general.