Every new technology brings great benefits but also the risk of misuse, and artificial intelligence (AI) is no different. Meta has postponed the launch of its new generative AI model, which can create speech from text, because it could be used for scams.

Unveiling the AI Voicebox From Meta

In a recent development, Meta has unveiled Voicebox, a generative AI model trained to transform text input into speech output. According to the tech giant, Voicebox has the ability to “generalize to speech-generation tasks it was not specifically trained to accomplish with state-of-the-art performance.”

The model has been trained on more than 50,000 hours of recorded speech and transcripts from public-domain audiobooks in English, French, Spanish, German, Polish, and Portuguese. This gives it the ability to produce audio in all six languages as well as transfer speech between them.

Speaking of the innovation, the tech company said:

“This capability could be used in the future to help people communicate in a natural, authentic way even if they don’t speak the same languages.”

Meta’s researchers say that, thanks to this wide and diverse dataset, the algorithm can produce speech that sounds more conversational, regardless of the languages each party speaks. Meta added:

“Our results show that speech recognition models trained on Voicebox-generated synthetic speech perform almost as well as models trained on real speech.”

Aside from generating speech, Voicebox can also edit audio files. Users can remove noise from prerecorded audio, or replace parts of a recording with other words while keeping the result sounding like the original speaker, without having to record again.

Meta Shows Off The Other Side of AI

Despite all these capabilities, Meta stated that neither the model nor its source code would be made available to the public just yet.

“There are many exciting use cases for generative speech models, but because of the potential risks of misuse, we are not making the Voicebox model or code publicly available at this time,” the company stated.

“While we believe it is important to be open with the AI community and to share our research to advance the state of the art in AI, it’s also necessary to strike the right balance between openness with responsibility,” the company added, stressing the importance of transparency and responsibility in the development of technology.

Meta acknowledged that the technology could be used to cause unintended harm, in this case through deepfakes. A deepfake is a video or audio recording that replaces someone’s face or voice with that of someone else in a way that appears real.

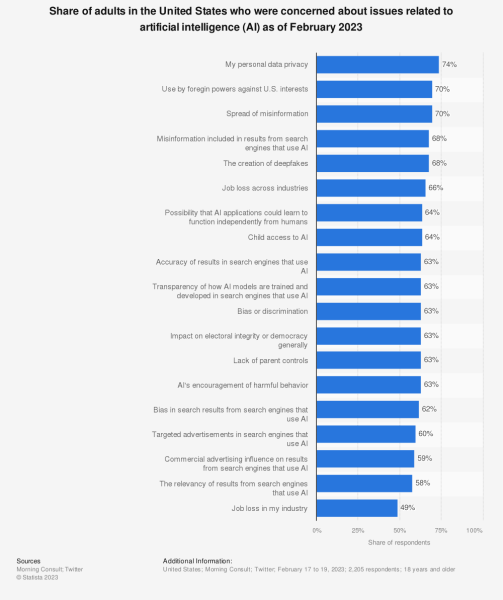

With the advancements that have been made in AI so far, data by Statista shows that at least 68% of adults in the US fear that the technology could be manipulated to generate deepfakes.

These fears are not misplaced: there have already been reports worldwide of con artists using powerful AI tools to produce deepfake voice recordings and videos of people. The fraudsters then use these recordings to trick the victims’ family and friends into sending money.

One such case was reported by CNN in April: con artists used AI technology to impersonate the 15-year-old daughter of an Arizona woman and demand a ransom for the teen’s release. In March, an AI-generated photo showing former President Donald Trump being arrested was widely shared on social media.

During the Regional Anti-Scam Conference 2023 held at the Police Cantonment Complex, Singapore’s Minister of State for Home Affairs Sun Xueling stated that scammers have been using deepfake technology to impersonate authorities.

“We have already seen overseas examples of bad actors making use of deepfake technology to create convincing clones – whether voice or videos of public figures – to spread disinformation.”

Such uses are especially dangerous: if people can never be sure whether a message is real or fake, they can no longer trust authorities and those charged with keeping them safe.

Unfortunately, the continued development in AI only simplifies the process further. According to Matthew Wright, Chair of Computer Science at the Rochester Institute of Technology, “They [con artists] don’t need very much of the voice to make a pretty good reproduction.”

In fact, Meta’s Voicebox has the ability to generate audio from just a 2-second sample. This opens the world up to a wide array of risks and many different cases of fraud.

New: we proved it could be done. I used an AI replica of my voice to break into my bank account. The AI tricked the bank into thinking it was talking to me. Could access my balances, transactions, etc. Shatters the idea that voice biometrics are foolproof https://t.co/YO6m8DIpqR pic.twitter.com/hsjHaKqu2E

— Joseph Cox (@josephfcox) February 23, 2023

Even with relatively rudimentary AI, Twitter user Joseph Cox was able to break into his own bank account by replicating his voice. There’s no telling what kind of scams could be pulled off with Meta’s Voicebox.
