The Federal Bureau of Investigation has issued a public service announcement (PSA), warning that criminals are using deepfakes to target victims for extortion.

In a Monday announcement, the federal agency said that criminals are altering photos or videos of individuals into explicit content to harass victims or to run sextortion schemes.

The criminals use content manipulation technologies and services to turn photos or videos, whether captured from an individual’s social media account or the open internet or requested from the victim, into sexually themed images that appear true-to-life.

The malicious actors then circulate the images or videos on social media, public forums, or pornographic websites.

Many victims, including minors, are unaware that their images have been copied until someone else brings it to their attention.

“The FBI continues to receive reports from victims, including minor children and non-consenting adults, whose photos or videos were altered into explicit content,” the agency said.

According to the FBI, law enforcement agencies received over 7,000 reports of online extortion targeting minors in 2022.

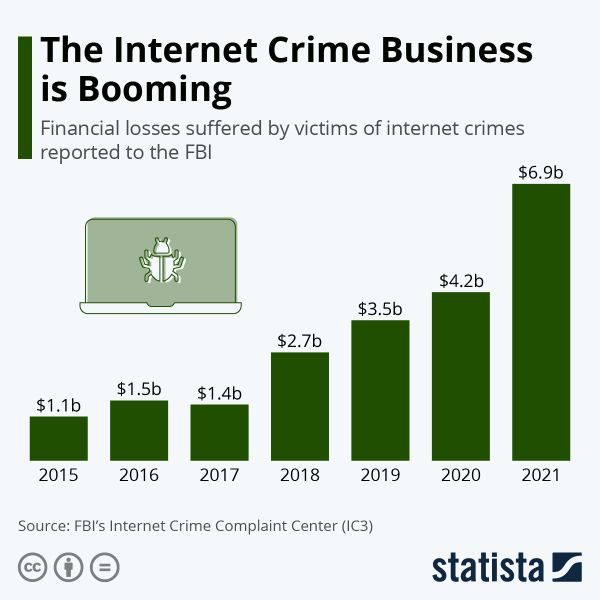

The warning comes as internet crime has grown rapidly over the past couple of years.

Deepfakes Become More Prevalent Thanks to AI

The use of deepfakes to create synthetic content is becoming increasingly common thanks to improvements in the quality, customizability, and accessibility of AI-enabled content creation tools.

Generative AI platforms such as Midjourney 5.1 and OpenAI’s DALL-E 2, for example, have made it substantially easier to create realistic images depicting events that never happened.

Deepfakes have already been used maliciously. In May, for instance, a deepfake of Tesla and Twitter CEO Elon Musk was created to scam crypto investors.

The video, shared on social media, combined footage of Musk from past interviews that had been manipulated to fit the fraudulent scheme.

AI deepfake technology has really outpaced nation-states. Here's an example of an AI-generated audio of a supposed speech made by China's foreign secretary Qin Gang. Nearly perfect, except for the lack of ambient audience sound & that bit on Russia. pic.twitter.com/VnBHP4MR0J

— Senzaltro Otravia (@SOtravia) June 5, 2023

However, not all deepfakes are created with bad intentions. For example, an AI-generated image of Pope Francis wearing a white Balenciaga jacket, made for entertainment, went viral earlier this year.

FBI Recommendations to Combat Deepfakes

The FBI recommended that parents monitor their children’s online activity, discuss the risks associated with sharing personal content, and use discretion when posting images, videos, and personal content online, particularly those that include children or their information.

Individuals should also frequently search online for their own and their children’s information to help identify how much personal information is exposed and how far it has spread on the internet.

Additionally, individuals should apply privacy settings on social media accounts to limit the public exposure of their photos, videos, and other personal information.

The FBI urged the public to exercise caution when posting or direct messaging personal photos, videos, and identifying information on social media, dating apps, and other online sites, as such images and videos can give malicious actors an abundant supply of content to exploit for criminal activity.

The use of deepfakes to target victims for extortion is a growing concern, and individuals must take steps to protect their personal information and avoid becoming victims of these scams.

Besides the FBI, other government agencies have also warned about the potential misuse of AI tools.

In a consumer alert in March, the US Federal Trade Commission warned that criminals have been creating audio deepfakes of a friend or family member claiming to have been kidnapped, then using them to trick unsuspecting victims into sending money. It said:

“Artificial intelligence is no longer a far-fetched idea out of a sci-fi movie. We’re living with it, here and now. A scammer could use AI to clone the voice of your loved one.”
