Nvidia, the developer of the first GPU, has introduced new software that it claims can solve one of ChatGPT’s biggest problems: hallucination. The software, named NeMo Guardrails, could potentially prevent AI models from presenting false information, discussing hazardous topics, or exploiting security flaws.

Software Helps Developers Add Guardrails to AI Chatbots

Amid the global excitement over generative artificial intelligence (AI), chatbots built on large language models (LLMs), led by the well-known ChatGPT, are becoming increasingly common.

These models are trained on terabytes of data so that they can produce text responses that read as if a human had written them.

However, most of these LLMs share a major problem, among others: they make things up and state false information. This deters businesses, which consider such models unsafe and insecure.

To address this, Nvidia has developed and released open-source software that keeps such models in line by guiding them away from topics they should not be addressing. As a result, the models only offer information that meets the safety and security requirements of the enterprise for which they are built.

According to Nvidia, NeMo Guardrails is meant to help “developers guide generative AI applications to create impressive text responses that stay on track” while ensuring smart applications powered by LLMs are “accurate, appropriate, on topic and secure.”

“Today’s release comes as many industries are adopting LLMs, the powerful engines behind these AI apps. They’re answering customers’ questions, summarizing lengthy documents, even writing software, and accelerating drug design. NeMo Guardrails is designed to help users keep this new class of AI-powered applications safe,” said Nvidia in a blog post.

The software can stop a model from displaying harmful content, keep an LLM chatbot on a particular subject, and stop LLM systems from running dangerous commands on a computer.

“You can write a script that says, if someone talks about this topic, no matter what, respond this way,” said Jonathan Cohen, Nvidia vice president of applied research. “You don’t have to trust that a language model will follow a prompt or follow your instructions. It’s actually hard coded in the execution logic of the guardrail system what will happen.”
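The idea Cohen describes, a fixed response that is decided in code rather than left to the model, can be illustrated with a minimal Python sketch. This is not Colang and not the NeMo Guardrails API; the names `CANNED_REPLIES`, `guarded_reply`, and `fake_model` are hypothetical, and simple keyword matching stands in for whatever topic detection a real guardrail system uses.

```python
from typing import Callable, Dict

# Hypothetical rule table (an assumption for illustration): keyword -> fixed reply.
CANNED_REPLIES: Dict[str, str] = {
    "competitor": "I can only help with questions about our own products.",
}


def guarded_reply(user_message: str, model: Callable[[str], str]) -> str:
    """Return a fixed reply if a rule matches; otherwise defer to the model."""
    lowered = user_message.lower()
    for keyword, reply in CANNED_REPLIES.items():
        if keyword in lowered:
            # The model is never called for a matched topic, so the outcome
            # does not depend on the model following a prompt.
            return reply
    return model(user_message)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call, used only to make the sketch runnable."""
    return f"MODEL: {prompt}"
```

Because the rule is checked before the model is invoked, a matched topic always produces the same reply, which is the sense in which the behavior is "hard coded in the execution logic."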

The release of this software also underscores Nvidia’s plan to maintain its dominance in the market for AI hardware while also building essential machine learning tools.

Three Types of Boundaries

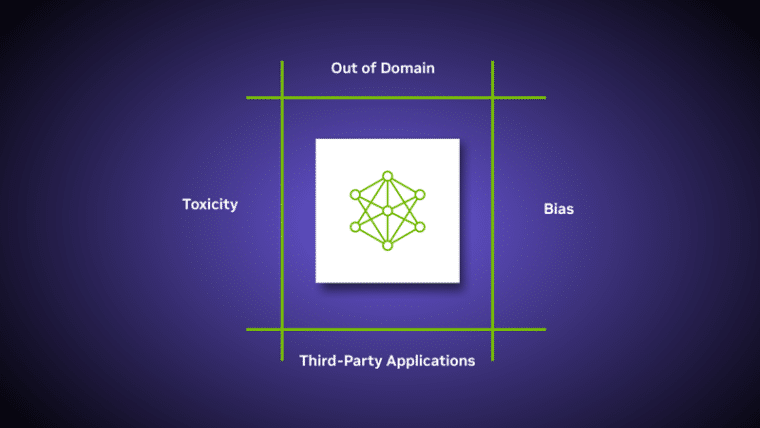

The NeMo Guardrails software sits as a barrier between the user and the LLM or other AI model, stopping undesirable prompts and outputs before a response is displayed to the user.

With this software, developers can set up three types of boundaries depending on their business needs. The first kind is topical guardrails, which prevent apps from deviating into inappropriate topics.

“If you have a customer service chatbot, designed to talk about your products, you probably don’t want it to answer questions about our competitors. You want to monitor the conversation. And if that happens, you steer the conversation back to the topics you prefer,” explained Cohen.

The second type is safety guardrails, which make sure applications respond only with relevant and correct information by filtering out offensive language and requiring that only reliable sources are cited.

Lastly, security guardrails prevent apps from connecting to unsafe or harmful external third-party applications.
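One common way to enforce a boundary like the security guardrail is an allowlist: the application may only call external tools that have been explicitly approved. The Python sketch below illustrates that general technique under stated assumptions. It is not how NeMo Guardrails is implemented, and `ALLOWED_TOOLS`, `BlockedToolError`, and `call_tool` are hypothetical names.

```python
from typing import Callable, Dict

# Hypothetical allowlist of external tools the app is permitted to reach.
ALLOWED_TOOLS = {"order_lookup", "store_hours"}


class BlockedToolError(Exception):
    """Raised when the app tries to use a tool that is not on the allowlist."""


def call_tool(name: str, registry: Dict[str, Callable[[str], str]], arg: str) -> str:
    """Run a registered tool only if its name is on the allowlist."""
    if name not in ALLOWED_TOOLS:
        raise BlockedToolError(f"tool '{name}' is not approved")
    return registry[name](arg)
```

With this shape, a request from the model to run an unapproved tool (for example, a shell command) fails before any external connection is made.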

In addition, NeMo Guardrails can detect hallucinations by asking a second LLM to fact-check the first LLM’s response. If the second model does not produce a matching result, the first model simply responds with “I don’t know.”
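The cross-checking step can be sketched in a few lines of Python. The article does not say how the two answers are compared, so the comparison here (case-insensitive equality after trimming whitespace and a trailing period) is an assumption made purely for illustration, and `checked_answer`, `primary`, and `checker` are hypothetical names rather than part of NeMo Guardrails.

```python
from typing import Callable

FALLBACK = "I don't know"


def _normalize(text: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(text.lower().split()).rstrip(".")


def checked_answer(
    question: str,
    primary: Callable[[str], str],
    checker: Callable[[str], str],
) -> str:
    """Return the primary model's answer only if the checker model agrees."""
    first = primary(question)
    second = checker(question)
    if _normalize(first) == _normalize(second):
        return first
    # The answers disagree, so treat the first answer as unverified.
    return FALLBACK
```

A production system would likely need a looser notion of agreement than string equality, since two correct answers are rarely worded identically.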

NeMo Guardrails can be used in commercial applications and is available through Nvidia services. According to Nvidia, programmers will create their own rules for the AI model using the Colang programming language.

Nvidia asserts that almost all software developers will be able to use NeMo Guardrails, given its ease of use and its compatibility with a wide range of LLM-enabled programs and with the tools enterprise app developers already use, such as LangChain.
