DC/OS stands for Data Center Operating System. It lets you manage multiple machines, or nodes, as if they were a single pool of resources. Unlike a traditional operating system, DC/OS spans multiple machines within a network and aggregates their resources to maximize utilization by distributed applications. You can think of DC/OS as an operating system like CentOS, RHEL or Ubuntu, but one that goes beyond them: it uses a unified API to manage containers and distributed services across many machines, in the cloud or on-premises, from a single interface. It automates resource management, schedules process placement, facilitates inter-process communication, and simplifies the installation and management of distributed services. DC/OS has a web interface and a command-line interface (CLI) for remote management and monitoring of the cluster and its services.

There are two editions of DC/OS. The enterprise edition includes advanced features for security, compliance, multitenancy, networking and storage, and is backed by 24×7, SLA-governed support. The open source edition is a distributed operating system based on the Apache Mesos distributed systems kernel. On top of Mesos, open source DC/OS adds service discovery, the Universe package repository for different frameworks, CLI and GUI support for management, and volume support for persistent storage.

Benefits

- It utilizes the maximum cluster resources available.

- It provides easy-to-use container orchestration right out of the box.

- It makes it possible to configure multiple resource isolation zones.

- It gives your services multiple persistent and ephemeral storage options.

- It makes it easy to install both public community and private proprietary packaged services.

- It makes it easy to install DC/OS on any cluster of physical or virtual machines (Cloud or on-prem).

- The DC/OS web and command line interfaces make it easy to monitor and manage the cluster and its services.

- It gives you the power to easily scale services up and down with the turn of a dial.

- It is highly available and makes it easy for your services to be highly available too.

- It provides automation for updating services and systems with zero downtime.

- It includes several options for automating service discovery and load balancing.

- It allows network isolation of virtual network subnets.

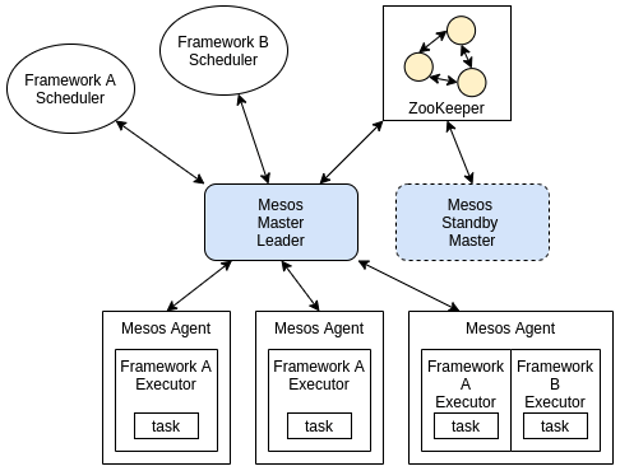

What is Mesos?

DC/OS is built on top of Apache Mesos, the open source distributed orchestrator for container as well as non-container workloads. Mesos is a cluster manager that hides the complexity of running applications on a shared pool of servers. It shares resources across application frameworks, each of which supplies a scheduler and an executor. You can think of Mesos as the kernel of DC/OS, playing the role the Linux kernel plays on a single machine.

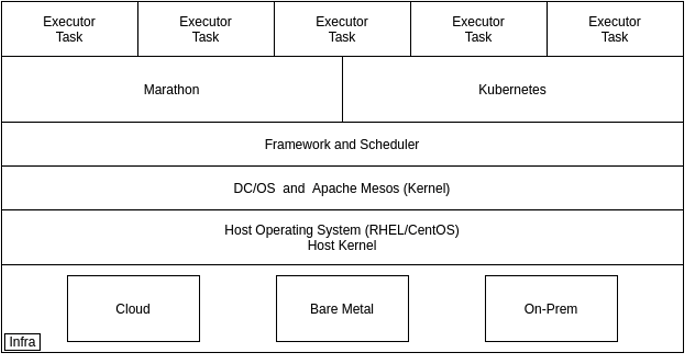

DC/OS Architecture Layers

Software Layer

- DC/OS provides package management and a package repository to install and manage several types of services.

Platform Layer

- This provides Cluster Management, Container Orchestration, Container Runtimes, Logging and Metrics, Networking, Package Management and Storage.

DC/OS Node Types

- DC/OS bootstrap node – A bootstrap machine is the machine on which the DC/OS installer artifacts are configured, built, and distributed.

- DC/OS Master nodes – A DC/OS master node is a virtual or physical machine that runs a collection of DC/OS components that work together to manage the rest of the cluster.

- DC/OS Public Agent node – A public agent node is an agent node that is on a network that allows access from outside of the cluster via the cluster’s infrastructure networking.

- DC/OS Private Agent node – A private agent node is an agent node that is on a network that does not allow access from outside of the cluster via the cluster’s infrastructure networking.

Infrastructure Layer

- DC/OS runs on host operating systems like CentOS, RHEL and CoreOS. It can be deployed on-prem as well as in the cloud. For both on-prem and cloud deployments, there is a Universal Installer, which is built on Terraform.

- DC/OS also comes with an e2e-test variant which supports Docker, AWS and Vagrant based test clusters. DC/OS uses Calico, an open-source networking and network security solution for containers, virtual machines, and native host-based workloads.

- DC/OS by default also comes with Marathon to manage container and microservices deployments.

Functionalities and Components of DC/OS – Marathon

Cluster Manager – Master and agent node/task management and execution

Container platform

- Task scheduling with Marathon/Metronome

- Runtimes – Docker and Mesos

- Jobs and Services

- Custom scheduler

Operating system

- Abstracts cluster hardware and software

- Scales up to thousands of nodes

- System space or kernel space

- User space or applications, jobs and service space

- Not a host OS (it requires a host OS such as RHEL or CentOS)

Two-Level Scheduling

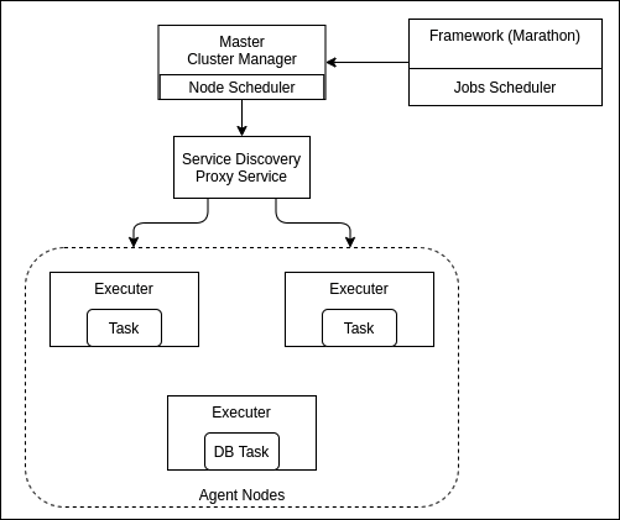

No single scheduler can serve every kind of workload well, because each workload has its own requirements and constraints. DC/OS manages this problem by separating resource management from task scheduling. Mesos manages CPU, memory, disk, and GPU resources. Task placement is delegated to higher-level schedulers that are more aware of their tasks’ specific requirements and constraints. This model, known as two-level scheduling, enables multiple workloads to be collocated efficiently.
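The split between the two levels can be illustrated with a toy model. The sketch below is not Mesos code: the `Offer` and `Task` types and the first-fit rule are assumptions made for illustration. The first level advertises free resources per agent, and the second level (a framework scheduler) decides which offers to use.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Free resources on one agent, as advertised by the resource manager."""
    agent: str
    cpus: float
    mem: int  # MiB


@dataclass
class Task:
    name: str
    cpus: float
    mem: int  # MiB


def schedule(offers, pending):
    """Second level: a framework scheduler picks offers for its tasks.

    Uses a simple first-fit rule. Returns (task name, agent) placements;
    tasks that fit no offer stay unplaced, as if the offers were declined.
    """
    placements = []
    for task in pending:
        for offer in offers:
            if offer.cpus >= task.cpus and offer.mem >= task.mem:
                # Use part of the offer and shrink what remains of it.
                offer.cpus -= task.cpus
                offer.mem -= task.mem
                placements.append((task.name, offer.agent))
                break
    return placements


# First level: the resource manager (Mesos, in DC/OS) offers free resources.
offers = [Offer("agent-1", cpus=2.0, mem=4096), Offer("agent-2", cpus=1.0, mem=1024)]
pending = [Task("web", 1.5, 2048), Task("cache", 1.0, 1024), Task("batch", 4.0, 8192)]

placements = schedule(offers, pending)
print(placements)
```

In this model the resource manager never needs to know why "batch" was not placed; only the framework scheduler understands its own tasks' requirements.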

Marathon Framework

Marathon acts as a core component, giving you a production-grade, well-tested scheduler that is capable of orchestrating both containerized and non-containerized workloads. With Marathon, you can reach an extreme scale, scheduling tens of thousands of tasks across thousands of nodes. You can use highly configurable declarative application definitions to enforce advanced placement constraints with node, cluster, and grouping affinities.
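As a rough illustration of a declarative definition with placement constraints, the sketch below builds a Marathon app definition as a Python dict and prints it as JSON. The app ID, command and resource values are hypothetical, and `rack_id` is assumed to be an attribute that has been configured on the agents. `UNIQUE` and `GROUP_BY` are Marathon constraint operators.

```python
import json


def app_with_constraints(app_id, cmd, instances, constraints):
    """Build a Marathon app definition as a plain dict."""
    return {
        "id": app_id,
        "cmd": cmd,
        "cpus": 0.1,
        "mem": 64,
        "instances": instances,
        "constraints": constraints,
    }


app = app_with_constraints(
    "/example/web",          # hypothetical app ID
    "sleep 3600",            # placeholder command
    instances=3,
    constraints=[
        ["hostname", "UNIQUE"],        # at most one instance per node
        ["rack_id", "GROUP_BY", "3"],  # spread evenly over 3 rack_id values (assumed attribute)
    ],
)

print(json.dumps(app, indent=2))
```

The printed JSON can be saved to a file and submitted to Marathon; Marathon then keeps the requested number of instances running within those constraints.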

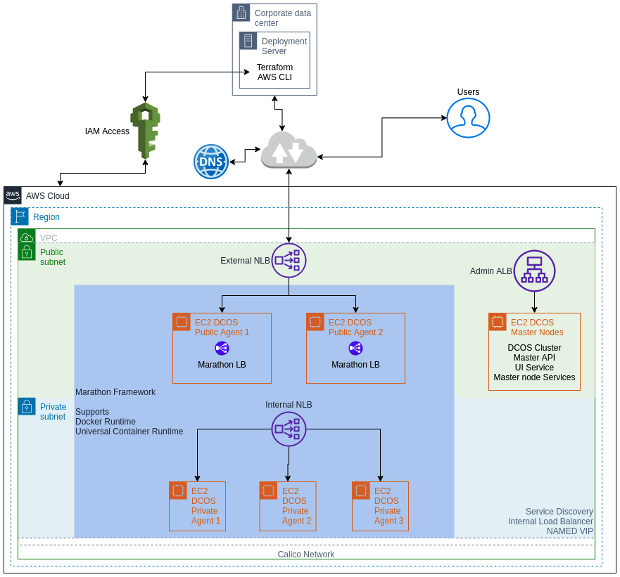

Launch on AWS Cloud Platform

For the DC/OS cluster we are going to use AWS EC2 instances as the nodes. The diagram below shows what this architecture looks like.

We will use the Terraform module to create and maintain the infrastructure components. First, set up Terraform on the machine from which we are going to deploy the DC/OS cluster. On-prem and other cloud installation steps can be found here (https://docs.d2iq.com/mesosphere/dcos/2.1/installing/).

In this setup we are going to use the AWS cloud platform and DC/OS Universal Installer 0.3 with DC/OS version 2.1.

Prerequisites

- Generate an SSH key pair for SSH access to the instances.

The Terraform config requires the SSH key to exist before deployment. Make sure the public key is available at the path set in “ssh_public_key_file”.

- A Linux, macOS or Windows machine with a command line, from which we are going to run Universal Installer 0.3.

- AWS CLI configured with an IAM account (access key and secret key) that has basic permissions for EC2, VPC, S3 and IAM.

- Terraform installed, at the latest stable release.

As we are using open-source version of DC/OS, we do not need a licence key. Now, we can proceed to deployment.

Deploy DC/OS Cluster

Create a demo directory.

Create the Terraform file with a name that suits your deployment. In this example, we are going to create a file called dcos.tf. Make sure the file ends with the extension .tf.

File: dcos.tf

With this Terraform template we are going to launch a cluster with 1 master node, 2 private agent nodes, 1 public agent node and 1 default bootstrap node.

Access to this setup is restricted to the IP address returned by the “whatismyip” lookup in the template.
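The shape of such a configuration can be sketched as follows. Terraform also accepts JSON-syntax files (`.tf.json`), so this Python snippet generates one rather than the HCL `dcos.tf`. The module source `dcos-terraform/dcos/aws` and the input names are assumptions that should be checked against the module's documentation. The region, cluster name, key path and admin address are hypothetical placeholders.

```python
import json


def dcos_cluster_config(cluster_name, ssh_public_key_file, admin_cidr,
                        masters=1, private_agents=2, public_agents=1):
    """Return a Terraform JSON-syntax configuration as a dict.

    Input names follow the dcos-terraform AWS module as an assumption;
    verify them against the module documentation before use.
    """
    return {
        "provider": {"aws": {"region": "us-east-1"}},  # assumed region
        "module": {
            "dcos": {
                "source": "dcos-terraform/dcos/aws",
                "version": "~> 0.3.0",
                "cluster_name": cluster_name,
                "ssh_public_key_file": ssh_public_key_file,
                "admin_ips": [admin_cidr],  # addresses allowed to reach the admin LB
                "num_masters": masters,
                "num_private_agents": private_agents,
                "num_public_agents": public_agents,
                "dcos_version": "2.1.0",
                "dcos_variant": "open",  # open source edition, no licence key
            }
        },
    }


# 203.0.113.10 is a documentation-only address standing in for your public IP.
config = dcos_cluster_config("dcos-demo", "~/.ssh/id_rsa.pub", "203.0.113.10/32")
print(json.dumps(config, indent=2))
```

Saved as, for example, `dcos.tf.json` in the demo directory, a file like this would be picked up by `terraform init` and `terraform apply` in the same way as an HCL file.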

After the installation, the cluster can be managed through the UI, CLI and API. The deployment will create:

EC2 Instances:

1 Bootstrap node – bootstraps the other nodes according to their role (using Ansible).

N Master nodes – to manage the cluster API and resources.

N Public agent nodes – to sit behind the external load-balancer.

N Private agent nodes – to sit behind the internal load-balancer.

ELB:

1 Admin DC/OS UI load-balancer (ALB)

1 External public facing load-balancer – you can map your custom DNS here. (NLB)

1 Internal load-balancer for internal use, within VPC. (NLB)

Security Group to manage traffic. Specifically, Admin LB access control through white-listed IP addresses.

DC/OS UI Login:

Open the Admin LB endpoint which we got from the output of universal installer (Terraform).

First, log in with an OpenID provider (a Google or Microsoft account).

Some default useful URLs:

Default services and features:

DC/OS comes with Marathon installed by default to manage stateless, long-running applications.

Marathon supports Docker and Universal Container Runtime.

DC/OS also provides non-container application execution as Jobs by using the Metronome framework. You can directly execute binary or packaged executables by supplying simple commands.
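A minimal sketch of what a job definition for a plain command can look like, built as a Python dict and printed as JSON. The job ID, description, command and resource values are hypothetical. The layout with a `run` section holding `cmd`, `cpus`, `mem` and `disk` follows the Metronome job format.

```python
import json

# Hypothetical job that runs a shell command without any container image.
job = {
    "id": "example-cleanup",
    "description": "Runs a simple shell command as a one-off job",
    "run": {
        "cmd": "echo cleaning up && sleep 5",
        "cpus": 0.1,   # fraction of a CPU
        "mem": 32,     # MiB
        "disk": 0,     # MiB
    },
}

job_json = json.dumps(job, indent=2)
print(job_json)
```

If the output is saved as `job.json`, the job can be registered with `dcos job add job.json` and started with `dcos job run example-cleanup`.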

Configure CLI

Installation instructions can be found by clicking on “cluster-name” in the upper-right corner of the DC/OS UI.

Sample steps for Linux:

CLI commands:

Sample application file: app.json

Check the “Services” tab in the UI and explore the service management operations like start, stop, scale, terminate, configuration history, logs, etc.

Note that Service and Group support “Quota”. With this we can define the CPU and memory resource allocation quota.

Service Discovery:

Service discovery enables you to address applications independently of where they are running in the cluster, which is particularly useful in cases where applications may fail and be restarted on a different host.

DC/OS provides two options for service discovery:

Mesos-DNS:

Resolve internal service host and port information through SRV record lookup.

Example: dig srv _appname._tcp.marathon.mesos
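The naming pattern in the `dig` example can be expressed as a small helper, together with a parser for the data part of an SRV answer. This is a sketch that only covers top-level app names. The sample answer is made up for illustration and is not output from a real cluster.

```python
def srv_name(app, protocol="tcp", framework="marathon", domain="mesos"):
    """Build the SRV record name for a top-level app, e.g. _appname._tcp.marathon.mesos."""
    return f"_{app}._{protocol}.{framework}.{domain}"


def parse_srv_rdata(rdata):
    """Parse SRV record data, which is ordered: priority weight port target."""
    priority, weight, port, target = rdata.split()
    return {
        "priority": int(priority),
        "weight": int(weight),
        "port": int(port),
        "target": target.rstrip("."),  # drop the trailing root dot
    }


name = srv_name("appname")
# Made-up record data in the form a resolver would return for the name above.
record = parse_srv_rdata("0 0 31302 appname-example.marathon.slave.mesos.")

print(name)
print(f"{record['target']}:{record['port']}")
```

The port in the answer is the host port the task was actually assigned, which is what makes SRV lookups useful when ports are allocated dynamically.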

Named Virtual IPs:

Allows you to assign name/port pairs to your apps, which means you can give your apps meaningful names with a predictable port, by adding the following to the package definition (json).
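A minimal sketch of such a definition, assuming host networking, where the VIP is declared as a `VIP_0` label on a port definition. The service name `my-service`, port 5555 and the port name are hypothetical. The resulting address follows the `<name>.marathon.l4lb.thisdcos.directory:<port>` pattern used for named VIPs.

```python
import json


def vip_port_definition(name, vip_port, protocol="tcp"):
    """Port definition that attaches the named VIP /<name>:<vip_port> to the first port."""
    return {
        "port": 0,              # let Marathon assign the host port
        "protocol": protocol,
        "name": "http",         # hypothetical port name
        "labels": {"VIP_0": f"/{name}:{vip_port}"},
    }


def vip_address(name, vip_port, framework="marathon"):
    """Address other services in the cluster use to reach the VIP."""
    return f"{name}.{framework}.l4lb.thisdcos.directory:{vip_port}"


fragment = {"portDefinitions": [vip_port_definition("my-service", 5555)]}

print(json.dumps(fragment, indent=2))
print(vip_address("my-service", 5555))
```

With container bridge networking the same label would go on an entry in the container's port mappings instead.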

Catalog (Default repo):

From the DC/OS UI navigate to the “Catalog” tab.

DC/OS provides a large variety of pre-defined, ready-to-use tools through the “Catalog”, including packages like Jenkins, Prometheus, Grafana, MongoDB, Redis and OpenVPN.

As our application is deployed on DC/OS-Marathon framework, we will make our frontend service web accessible through a public load-balancer.

Marathon-LB:

We will use Marathon-LB, a Python-based utility backed by HAProxy.

Search for “marathon-lb” and select it from the community packages. Install it with the default configuration.

By default, Marathon-LB attaches to the external load-balancer and deploys itself in the public agent nodes.

Define the HAProxy labels (Key:Value) on the application through the json manifest.
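The labels in question can be sketched as below. `HAPROXY_GROUP` and `HAPROXY_0_VHOST` are Marathon-LB label names. The app definition and the hostname `app.example.com` are hypothetical, and `external` is assumed to be the group of the default Marathon-LB installation.

```python
import json


def expose_via_marathon_lb(app, vhost, group="external", port_index=0):
    """Return a copy of a Marathon app definition with Marathon-LB labels added."""
    labels = dict(app.get("labels", {}))
    labels["HAPROXY_GROUP"] = group                # which Marathon-LB instance picks it up
    labels[f"HAPROXY_{port_index}_VHOST"] = vhost  # hostname routed to this port
    return {**app, "labels": labels}


frontend = {"id": "/example/frontend", "cpus": 0.1, "mem": 64, "instances": 2}
exposed = expose_via_marathon_lb(frontend, "app.example.com")

print(json.dumps(exposed["labels"], indent=2))
```

The original dict is left unchanged, so the same base definition can be reused for an internal variant with a different group.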

After deploying the application with above configuration, map your DNS records to the external load-balancer CNAME.

Please refer to the official doc for more information:

https://docs.d2iq.com/mesosphere/dcos/services/marathon-lb/1.14/mlb-reference/

User Management

External user accounts: External user accounts exist for users who want to use their Google, GitHub, or Microsoft credentials. DC/OS never receives or stores the passwords of external users.

Local user accounts: Local user accounts exist for users who want to create a user account within DC/OS. Usernames and password hashes are stored in the IAM database.

Service accounts: A machine interacting with DC/OS should always go through a service account login for obtaining an authentication token. Do not use a username/password-based login in that case.

Let’s add a local user with a simple username and password.

Auto-Scaling of Marathon Apps

To install the application auto-scaler, navigate to the “Catalog”, then search for and select “marathon-autoscaler”.

In the service configuration of “Autoscaler”, set “Marathon App” to a specific ID like “/example/appid”, and set “Userid” and “Password” to the credentials of the local user we created in the previous step.

Deploy the autoscaler for that specific Marathon application.

For more details refer to: https://github.com/mesosphere/marathon-autoscale
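To make the idea of threshold-based scaling concrete, here is a generic sketch of the decision an autoscaler makes on each check. It is not the marathon-autoscale implementation; the thresholds, step size and instance limits are assumptions chosen for illustration.

```python
def desired_instances(current, cpu_percent, scale_up_at=80.0, scale_down_at=20.0,
                      min_instances=1, max_instances=10):
    """Return the instance count to request for the observed average CPU usage."""
    if cpu_percent > scale_up_at:
        target = current + 1   # overloaded: add one instance
    elif cpu_percent < scale_down_at:
        target = current - 1   # mostly idle: remove one instance
    else:
        target = current       # within the band: leave as is
    # Never go outside the configured limits.
    return max(min_instances, min(max_instances, target))


for current, cpu in [(2, 90.0), (2, 10.0), (1, 10.0), (10, 95.0), (3, 50.0)]:
    print(current, cpu, "->", desired_instances(current, cpu))
```

Keeping a gap between the two thresholds avoids flapping, where the app is scaled up and down repeatedly around a single cut-off value.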

Metrics

Types of metrics:

- System: Metrics about each node in the DC/OS cluster.

- Component: Metrics about the components which make up DC/OS.

- Container: Metrics about cgroup allocations from tasks running in the DC/OS Universal Container Runtime or Docker Engine runtime.

- Application: Metrics emitted from any application running on the Universal Container Runtime.

An application can export custom metrics to the DC/OS metrics service over StatsD. DC/OS injects the standard environment variables STATSD_UDP_HOST and STATSD_UDP_PORT into the container, and the application sends its metrics to that address.
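A minimal sketch of an application-side emitter, using only the Python standard library. It reads the two environment variables and sends one StatsD line (`name:value|type`) per metric over UDP. The metric names are hypothetical, and the emitter does nothing when the variables are absent, for example outside the cluster.

```python
import os
import socket


def statsd_target(environ=os.environ):
    """Return (host, port) from the injected variables, or None if they are not set."""
    host = environ.get("STATSD_UDP_HOST")
    port = environ.get("STATSD_UDP_PORT")
    if not host or not port:
        return None
    return host, int(port)


def format_metric(name, value, kind="g"):
    """Encode one metric as a StatsD line; kind is 'g' (gauge), 'c' (counter) or 'ms' (timer)."""
    return f"{name}:{value}|{kind}".encode("ascii")


def send_metric(name, value, kind="g", environ=os.environ):
    """Send one metric over UDP. Returns False when no StatsD target is configured."""
    target = statsd_target(environ)
    if target is None:
        return False
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(format_metric(name, value, kind), target)
    return True


print(format_metric("example.requests", 1, "c"))
print(send_metric("example.queue_depth", 7, environ={}))
```

UDP is fire-and-forget, so a missing or slow metrics endpoint does not block the application.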

Alternatively, the application can serve metrics in Prometheus format by exposing a corresponding endpoint. At least one port in the application definition needs the label DCOS_METRICS_FORMAT=prometheus.

Example: "labels": { "DCOS_METRICS_FORMAT": "prometheus" }
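On the application side, exposing such an endpoint can be as small as the sketch below, which renders metrics in the Prometheus text format and serves them at `/metrics` using the Python standard library. The metric names, the in-process `METRICS` registry and the `/metrics` path are assumptions made for illustration.

```python
from http.server import BaseHTTPRequestHandler


def render_prometheus(metrics):
    """Render {name: (type, value)} in the Prometheus text exposition format."""
    lines = []
    for name, (kind, value) in sorted(metrics.items()):
        lines.append(f"# TYPE {name} {kind}")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical in-process registry of current metric values.
METRICS = {
    "example_requests_total": ("counter", 3),
    "example_queue_depth": ("gauge", 7),
}


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the current metrics at /metrics and returns 404 for anything else."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_prometheus(METRICS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


print(render_prometheus(METRICS), end="")
```

To serve it, the handler would be passed to `http.server.HTTPServer` bound to the port that carries the label, for example the port Marathon provides in the `PORT0` environment variable.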

CLI to test metrics:

Note: Metrics in DC/OS 1.12 and newer versions use Telegraf to collect and process data. Telegraf provides a plugin-driven architecture.

DCOS Monitoring Plugin

From the UI “Catalog” tab search and install “dcos-monitoring”, which includes Prometheus agent/server and Grafana.

Access URL for Monitoring:

For more details refer to: https://docs.d2iq.com/mesosphere/dcos/2.1/metrics/
