With its ability to analyze vast amounts of data and deduce patterns, A.I is poised to disrupt one of the world’s most data-hungry industries: web scraping. One of that industry’s major drawbacks is that traditional web scraping bots are poor at recognizing data.

This shortfall is critical, especially when scraping a vast range of websites with unique layouts and content architectures. This problem becomes more pronounced when the websites used as data sources undergo upgrades that alter their layouts significantly.

To get around this problem, a business might need to limit its web scraping campaigns to websites with identical layouts. And even such a list gets pared down whenever any site undergoes layout changes.

Besides helping extract data from myriad dynamic websites, A.I promises to revolutionize how developers execute each step of the web scraping process, from choosing the right data pipelines to cleaning and categorizing the data.

With A.I web scraping, businesses can better handle large volumes of data aggregated from a variety of sources, which can significantly boost productivity in enterprise management. You can leverage it to enhance the outcomes of activities like supply chain analytics, labor research, market research, and sentiment analysis for new products.

If you’re undecided about which A.I-based web scraping approach to use, case studies are an easy place to start. I’ve put together three insightful case studies of how enterprises put A.I to work in their web scraping processes.

Why A.I is Becoming a Master Trend in the Web Scraping Industry

Caption: A.I is disrupting the web scraping industry in more ways than one

Source: https://www.statworx.com/at/blog/web-scraping-101-in-python-with-requests-beautifulsoup/

There’s never been a better time than now to join the A.I web scraping bandwagon. The cost of computing is declining, and A.I-enabling hardware such as NVIDIA GPUs is becoming increasingly ubiquitous. As these technologies mature, it’s becoming easier for businesses to deploy A.I in web scraping to gain a competitive edge.

While tech giants like Google, Microsoft, IBM, and Salesforce were the first to adopt A.I for web scraping, the required technologies are fast coming within the reach of businesses across the board. Small businesses are now finding it more viable to hire A.I developers to enhance the outcome of their web scraping processes with customized solutions.

From sales and marketing to IT, HR, and customer service, A.I-based web scraping technologies can make operations more efficient across business departments.

Here’s how the technology can help businesses gain a competitive edge.

Warp-Speed Data Collection

Some businesses can fetch all the web information they need for business intelligence from just a handful of websites. But most businesses that scrape the web must scour hundreds, sometimes even hundreds of thousands, of websites. Keeping up with such web scraping needs at a good speed can be as challenging as finding needles in haystacks of data.

A.I significantly fast-tracks the data collection phase of web scraping by enabling the speedy identification and classification of relevant data sources.
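To make “identifying and classifying relevant data sources” a little more concrete, here’s a minimal sketch of one classic technique for it: a multinomial naive Bayes text classifier that labels page snippets as relevant or irrelevant. It uses only Python’s standard library. The training snippets and labels are hypothetical. A real system would train on far more data and would likely use a more capable model.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; deliberately simple."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesRelevance:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def fit(self, docs: list[tuple[str, str]]) -> "NaiveBayesRelevance":
        for text, label in docs:
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_score(self, text: str, label: str) -> float:
        # log P(label) + sum over words of log P(word | label)
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        denom = sum(self.word_counts[label].values()) + len(self.vocab)
        for tok in tokenize(text):
            # Add-one smoothing keeps unseen words from zeroing the probability.
            score += math.log((self.word_counts[label][tok] + 1) / denom)
        return score

    def predict(self, text: str) -> str:
        return max(self.doc_counts, key=lambda label: self.log_score(text, label))


# Hypothetical labeled page snippets for a crop-price scraping project.
training = [
    ("wheat price per tonne rises on export demand", "relevant"),
    ("maize and soybean market prices weekly report", "relevant"),
    ("rice export prices fall in asian markets", "relevant"),
    ("celebrity gossip and movie reviews", "irrelevant"),
    ("top ten travel destinations this summer", "irrelevant"),
    ("football league results and highlights", "irrelevant"),
]
clf = NaiveBayesRelevance().fit(training)
print(clf.predict("daily soybean and wheat prices"))  # → relevant
print(clf.predict("summer movie highlights"))  # → irrelevant
```

In a crawler, a classifier like this would sit in front of the expensive extraction step, so that only pages scored as relevant get fully scraped.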

Collecting Larger Volumes of Data

With greater data collection speeds, A.I web scraping technologies allow businesses to scrape more websites in a single run. More information is likely to yield more insights and, ultimately, greater value from a business’s web scraping processes.

Enhanced Accuracy

Perhaps the biggest selling point of A.I-enabled web scraping is its capacity to deduce patterns with greater accuracy. Businesses can process and analyze much larger batches of data in a given time while deducing patterns more accurately. This ultimately translates into more value and a greater competitive edge.

Now, with these benefits in mind, let’s see A.I-based web scraping in action in three illuminating use cases.

A.I Web Scraper from MIT Researchers

Caption: Researchers at MIT have developed an A.I web scraping program that teaches itself to make better decisions with information from the web

Source: https://towardsai.net/p/category/web-scraping

Scientists at the Massachusetts Institute of Technology have published a paper describing an innovative A.I-powered web extraction program. The hallmark of the system is its capacity to teach itself to extract valuable information from the web.

Unlike traditional web scraping technologies, the MIT researchers’ A.I system doesn’t extract data by mechanically applying preset mathematical rules. Rather, when faced with unstructured data that doesn’t fit any predefined processing rules, the program takes a dynamic approach, scouring the web for more information that could help it make the right calls.

“(Traditional) information extraction (technologies)… are very different from what you or I would do,” said Professor Regina Barzilay, a senior researcher on the team. “When you’re reading an article that you can’t understand, you’re going to go on the web and find one that you can understand.”

The researchers’ technology acts and reacts like a human, rather than a machine, when it encounters anomalies while extracting data. And it does so at scale.

The technology’s most groundbreaking feature is its ability to teach itself from fewer examples provided by humans. Traditional machine-learning models require many examples covering a very narrow set of parameters. The new A.I data extractor, by contrast, needs very little training data. This is thanks to an algorithm that actively searches for information to fill the gaps.

Another key feature, though not unique to this system, is a "confidence score" that indicates how certain the program is of its predictions. By comparing the predictions it makes while self-learning with those it makes when trained with human intervention, the program can gauge how close it has come to predicting correctly.

If the confidence score doesn’t reach a certain threshold, the program reverts to research mode and feeds itself more relevant information that could improve its prediction accuracy. This cycle repeats until the program’s confidence score reaches or exceeds an acceptable threshold.
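The score-then-research loop described above can be sketched in a few lines. This is not the MIT team’s code. The `fetch_candidates` callback, the per-source confidence values, and the 0.8 threshold are hypothetical. Here the “confidence score” is simply the leading value’s share of a confidence-weighted vote.

```python
from collections import defaultdict
from typing import Callable, Iterable

# A candidate is (extracted_value, confidence of the source it came from, 0 to 1).
Candidate = tuple[str, float]


def merge_candidates(candidates: Iterable[Candidate]) -> tuple[str, float]:
    """Confidence-weighted vote: return the leading value and its share of the votes."""
    weights: dict[str, float] = defaultdict(float)
    for value, conf in candidates:
        weights[value] += conf
    best = max(weights, key=weights.get)
    total = sum(weights.values()) or 1.0  # avoid dividing by zero
    return best, weights[best] / total


def extract_until_confident(
    fetch_candidates: Callable[[int], list[Candidate]],
    threshold: float = 0.8,
    max_rounds: int = 5,
) -> tuple[str, float, int]:
    """Gather evidence round by round until the merged confidence clears the threshold."""
    evidence: list[Candidate] = []
    value, score, rounds_used = "", 0.0, 0
    for round_no in range(1, max_rounds + 1):
        rounds_used = round_no
        evidence.extend(fetch_candidates(round_no))  # "research mode": fetch more sources
        if not evidence:
            continue
        value, score = merge_candidates(evidence)
        if score >= threshold:
            break
    return value, score, rounds_used


# Hypothetical evidence: each round "searches" one more batch of sources
# for the same fact (here, a wheat price in USD per tonne).
batches = {
    1: [("231", 0.6), ("245", 0.5)],
    2: [("231", 0.9)],
    3: [("231", 0.8)],
}
value, score, used = extract_until_confident(lambda r: batches.get(r, []))
print(value, round(score, 2), used)  # → 231 0.82 3
```

The `max_rounds` cap is a design choice worth keeping in any real version, so that a fact the web simply can’t settle doesn’t trigger endless searching.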

“We used a technique called reinforcement learning, whereby a system learns through the notion of reward,” revealed Karthik Narasimhan, a graduate student on the team, during an interview with Digital Trends.

The technique relies on what’s known as a deep Q-network (DQN), a system “trained to optimize a reward function that reflects extraction accuracy while penalizing extra effort.” With this technique, the researchers can steer the machine’s effort in the right direction while deterring it from wasted effort.
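To illustrate a reward that “reflects extraction accuracy while penalizing extra effort,” here’s a deliberately tiny tabular Q-learning sketch. It is neither a deep Q-network nor the MIT system; a DQN replaces the lookup table below with a neural network. Everything in the setup is invented:

- the states (how many extra sources have been consulted)
- the two actions
- the accuracy figures
- the 0.1 effort penalty

The sketch sweeps every state-action pair rather than sampling episodes, which keeps the toy deterministic.

```python
# Toy problem: the state is how many extra sources have been consulted (0-3).
ACTIONS = ("accept", "query")
# Hypothetical extraction accuracy after consulting 0, 1, 2 or 3 extra sources.
ACCURACY = [0.50, 0.80, 0.85, 0.86]
EFFORT_PENALTY = 0.1  # cost charged for every extra query


def step(state: int, action: str) -> tuple[float, int, bool]:
    """Return (reward, next_state, done) for one action in the toy environment."""
    if action == "accept":
        return 0.0, state, True  # stop searching and keep the current answer
    next_state = min(state + 1, len(ACCURACY) - 1)
    gain = ACCURACY[next_state] - ACCURACY[state]
    return gain - EFFORT_PENALTY, next_state, False  # accuracy gain minus effort


def train(sweeps: int = 200, alpha: float = 0.5, gamma: float = 0.9) -> dict:
    q = {(s, a): 0.0 for s in range(len(ACCURACY)) for a in ACTIONS}
    for _ in range(sweeps):
        for s, a in list(q):
            r, s2, done = step(s, a)
            best_next = 0.0 if done else max(q[(s2, b)] for b in ACTIONS)
            # Q-learning update: move Q(s, a) toward r + gamma * max_b Q(s', b).
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(len(ACCURACY))}
print(policy)  # → {0: 'query', 1: 'accept', 2: 'accept', 3: 'accept'}
```

With these numbers the learned policy queries exactly once. The first extra source improves accuracy by more than the penalty, while later sources add less accuracy than they cost.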

“Because there is a lot of uncertainty in the data being merged — particularly where there is contrasting information — we give it rewards based on the accuracy of the data extraction. By performing this action on the training data we provided, the system learns to be able to merge different predictions in an optimal manner, so we can get the accurate answers we seek.”

The researchers believe their breakthrough will disrupt web scraping across industries, from healthcare to manufacturing, automotive, and digital marketing. The system’s self-learning algorithm can enhance research efforts in many ways. It can significantly cut down tedious research work while also delivering more accurate results.

A.I Web Scraping Tool for The Agricultural Markets Information System (AMIS)

With this A.I web scraping technology, the creators managed to fine-tune the data recognition proficiency of automated web scraping to near-human levels.

They’ve put together a post-extraction machine learning algorithm that picks out and categorizes similar elements across a vast collection of dynamic websites.

In a demonstration of this data-sorting proficiency, delivered as an A.I web scraping service to The Agricultural Markets Information System (AMIS), the creators tasked the machine with collecting the prices of four foods (maize, rice, soybeans, and wheat) from three different dynamic websites.

Caption: A screenshot of the first stage of the A.I-based web scraping process for AMIS

Source: https://www.researchgate.net/figure/fig3_322520038
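The AMIS tool relied on machine learning for its categorization. The sketch below is a much simpler, rule-based stand-in that shows what the step has to achieve: mapping differently worded labels from different sites onto the four commodities. The synonym table, site names, and rows are all hypothetical.

```python
import re
from collections import defaultdict
from typing import Optional

# Hypothetical synonym table; a learned model would replace this in practice.
CATEGORIES = {
    "maize": ["maize", "corn"],
    "rice": ["rice", "paddy"],
    "soybeans": ["soybean", "soybeans", "soya"],
    "wheat": ["wheat"],
}
SYNONYM_TO_CATEGORY = {syn: cat for cat, syns in CATEGORIES.items() for syn in syns}


def categorize(label: str) -> Optional[str]:
    """Map a scraped label to a canonical commodity using whole-word matches only,
    so that "rice" inside "price" is not a false hit."""
    words = re.findall(r"[a-z]+", label.lower())
    # Also try adjacent word pairs so "Soy beans" matches "soybeans".
    candidates = words + [a + b for a, b in zip(words, words[1:])]
    for token in candidates:
        if token in SYNONYM_TO_CATEGORY:
            return SYNONYM_TO_CATEGORY[token]
    return None


# Hypothetical rows scraped from three differently labeled sites.
scraped = [
    ("site-a", "Maize (yellow), USD/t", 212.0),
    ("site-b", "Corn, US No.2 Yellow, USD/t", 205.0),
    ("site-c", "Soy beans, CIF, USD/t", 530.0),
    ("site-a", "Wheat price, USD/t", 245.0),
    ("site-b", "Rice, Thai 5% broken, USD/t", 560.0),
    ("site-c", "Crude oil, USD/bbl", 80.0),
]
grouped = defaultdict(list)
for site, label, value in scraped:
    grouped[categorize(label) or "uncategorized"].append((site, value))
for category, rows in grouped.items():
    print(category, rows)
```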

First, the program performed the basic housekeeping to sort data from various unique sources into distinct categories. Then came the more complex part: keeping the database constantly updated regardless of changes in the layouts of data sources.
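One common way to keep extraction working after a redesign is to locate values by the text around them rather than by their fixed position in the page. The sketch below illustrates that idea with Python’s standard `html.parser`. It rests on several assumptions and is not the AMIS tool’s method. Both HTML snippets are invented.

```python
import re
from html.parser import HTMLParser
from typing import Optional


class TextCollector(HTMLParser):
    """Collect non-empty text fragments in document order, ignoring the markup."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []

    def handle_data(self, data: str) -> None:
        if data.strip():
            self.chunks.append(data.strip())


NUMBER = re.compile(r"\d+(?:\.\d+)?")


def price_after_label(html: str, label: str, lookahead: int = 3) -> Optional[float]:
    """Find the text fragment naming `label`, then the first number shortly after it."""
    parser = TextCollector()
    parser.feed(html)
    parser.close()
    label_re = re.compile(rf"\b{re.escape(label)}\b", re.IGNORECASE)  # whole word only
    for i, chunk in enumerate(parser.chunks):
        if label_re.search(chunk):
            for candidate in parser.chunks[i:i + lookahead]:
                match = NUMBER.search(candidate)
                if match:
                    return float(match.group())
    return None


# Two invented layouts of the "same" price page, before and after a redesign.
old_layout = ("<table><tr><td>Wheat</td><td>245.50</td></tr>"
              "<tr><td>Rice</td><td>560</td></tr></table>")
new_layout = ('<div class="card"><h3>Rice</h3><p>Price: <b>561.25</b> USD/t</p></div>'
              '<div class="card"><h3>Wheat</h3><p>Price: <b>247</b> USD/t</p></div>')
for page in (old_layout, new_layout):
    print(price_after_label(page, "wheat"), price_after_label(page, "rice"))
# → 245.5 560.0
# → 247.0 561.25
```

The same function reads both layouts because it never refers to tags, classes, or column positions. The trade-off is that it can be fooled when a label and an unrelated number sit close together, which is where a learned model earns its keep.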

Ordinarily, this step would require human judgment to sort through the dynamic sites. But the machine surpasses human accuracy, at a much larger scale and a faster pace, thanks to its ingenious “intelligent metasearch engine 4”.

Human researchers rarely look for sources beyond web search engines. This algorithm, however, augments its data sources with a host of platforms besides search engines. It also probes for data contained not only in typical HTML web pages but also in other files such as Excel spreadsheets, PDFs, PowerPoint presentations, etc.

The algorithm also edges out human accuracy thanks to its capacity to deduce the most appropriate search terms for each query. After the search, it analyzes and categorizes the extracted data based on pre-established user preferences.

Each stage of the process feeds into the others, including human interfacing, data extraction, data analysis, and organization. For instance, at the end of each search iteration, the user can gauge the accuracy of the results and provide feedback. The machine then uses that feedback to tweak its search terms and reach more plausible data sources.

The algorithm mimics human effort by altering its search terms in response to the user’s feedback in each data extraction cycle. In the food prices use case, the machine probed for search terms beyond those containing “price”, such as “world cost of food” and “world markets of maize, rice, soybeans”.

Search terms containing “price” guided the initial searches. Once the user had validated the most important types of data and data sources from the results, the algorithm worked out other search terms that were more likely to yield the desired data. It used these terms not only in the search queries but also in the data analysis and categorization.
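A crude approximation of this feedback loop is to promote words that appear more often in results the user validated than in results they rejected. The sketch below is hypothetical and is not the tool’s actual algorithm. The stopword list, the snippets, and the `top_n` cutoff are assumptions.

```python
import re
from collections import Counter

# Hypothetical stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "of", "and", "in", "for", "a", "on", "to", "per"}


def words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def refine_terms(query_terms: list[str], useful: list[str], rejected: list[str],
                 top_n: int = 3) -> list[str]:
    """Append the words most over-represented in validated results to the query."""
    good = Counter(w for snippet in useful for w in words(snippet))
    bad = Counter(w for snippet in rejected for w in words(snippet))
    scores = {w: good[w] - bad[w] for w in good if w not in query_terms}
    ranked = sorted(scores, key=lambda w: (-scores[w], w))  # ties broken alphabetically
    return query_terms + [w for w in ranked[:top_n] if scores[w] > 0]


terms = ["maize", "price"]
useful = [  # snippets the user marked as the right kind of data
    "World market of maize: export quotations, USD per tonne",
    "Maize export quotations and world market outlook",
]
rejected = ["Maize recipes for the price-conscious cook"]
print(refine_terms(terms, useful, rejected))
# → ['maize', 'price', 'export', 'market', 'quotations']
```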

In most use cases of the technology, more accurate results begin to take shape from the second cycle, after users give the algorithm their first round of feedback. The results of each iteration can be saved for future reference. This also makes it easy for users to prime the algorithm for new searches with inputs from the results of previous queries.
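Saving each iteration and priming the next search can be as simple as appending to a JSON log. The file name and function names below are hypothetical. The sketch only shows terms from earlier iterations being carried into a new query.

```python
import json
import tempfile
from pathlib import Path


def save_iteration(path: Path, terms: list[str], sources: list[str]) -> None:
    """Append one search iteration (its terms and validated sources) to a JSON log."""
    history = json.loads(path.read_text()) if path.exists() else []
    history.append({"terms": terms, "sources": sources})
    path.write_text(json.dumps(history, indent=2))


def prime_from_history(path: Path, base_terms: list[str]) -> list[str]:
    """Seed a new search with the base terms plus terms from earlier iterations."""
    primed = list(base_terms)
    if path.exists():
        for record in json.loads(path.read_text()):
            for term in record["terms"]:
                if term not in primed:  # keep first-seen order, skip duplicates
                    primed.append(term)
    return primed


with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "search_history.json"  # hypothetical file name
    save_iteration(log, ["maize", "price", "export"], ["https://example.org/a"])
    save_iteration(log, ["rice", "quotations"], ["https://example.org/b"])
    primed = prime_from_history(log, ["soybeans", "price"])
print(primed)  # → ['soybeans', 'price', 'maize', 'export', 'rice', 'quotations']
```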

An A.I Web Scraping Python Tool for a Petroleum Product Marketer’s Gas Prices Database

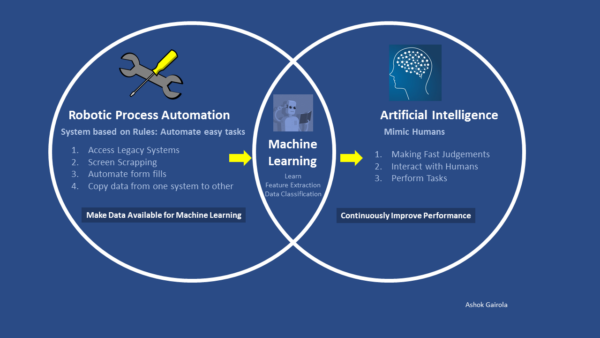

Caption: This case study combines RPA, machine learning, and A.I

Source: https://medium.com/@ashok.gairola/robotic-process-automation-rpa-vs-artificial-intelligence-8af3c26803be

In this use case, Bo Tree Technologies, a web development company, was tasked with creating a dynamic database of real-time gas prices for a fast-growing independent petroleum product marketer. The database was meant to help the marketer’s customers find real-time gas prices at stations in various cities, so they could determine where to buy at the cheapest price. The data needed from web scraping included the names of the gas stations along with their zip codes and fuel categories (regular, premium, etc.).

As with most traditional web scraping bots, the challenge was keeping the price updates automated even when the layouts of the data sources changed. This involved extracting data from dynamic sources and also converting it into the right format so that it could be uploaded quickly to the database. A viable solution would free up countless working hours and boost employees’ overall productivity.
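Converting scraped text into database-ready rows is often the fiddliest part of a job like this. The sketch below uses Python’s built-in `sqlite3`. The record shape, the table schema, the category aliases, and the sample stations are all assumptions, not Bo Tree Technologies’ implementation. Keying the table on station, ZIP code, and category has two effects: each new scrape overwrites the old price, and a single query returns the cheapest station in a ZIP code.

```python
import re
import sqlite3

# Hypothetical mapping from labels seen on different sites to canonical categories.
CATEGORY_ALIASES = {
    "regular": "regular", "unleaded": "regular", "reg": "regular",
    "premium": "premium", "super": "premium", "diesel": "diesel",
}


def normalize(raw: dict) -> tuple[str, str, str, float]:
    """Turn one scraped record of loose strings into a typed database row."""
    station = " ".join(raw["station"].split())  # collapse stray whitespace
    zip_match = re.search(r"\b\d{5}\b", raw["zip"])
    if zip_match is None:
        raise ValueError(f"no 5-digit ZIP code in {raw['zip']!r}")
    category = CATEGORY_ALIASES[raw["category"].strip().lower()]
    price = float(re.sub(r"[^\d.]", "", raw["price"]))  # "$3.459/gal" -> 3.459
    return station, zip_match.group(), category, price


def upsert(conn: sqlite3.Connection, rows: list[tuple[str, str, str, float]]) -> None:
    """Insert rows, replacing any earlier price for the same station/ZIP/category."""
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO prices VALUES (?, ?, ?, ?)", rows)


def cheapest(conn: sqlite3.Connection, zip_code: str, category: str):
    return conn.execute(
        "SELECT station, price FROM prices WHERE zip = ? AND category = ? "
        "ORDER BY price LIMIT 1",
        (zip_code, category),
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE prices (station TEXT, zip TEXT, category TEXT, price REAL, "
    "PRIMARY KEY (station, zip, category))"
)
# Invented records with the inconsistent formatting scraped pages tend to have.
scraped = [
    {"station": "Main St  Fuel", "zip": "Austin, TX 78701",
     "category": "Unleaded", "price": "$3.459/gal"},
    {"station": "Lakeside Gas", "zip": "78701", "category": "regular", "price": "3.399"},
    {"station": "Lakeside Gas", "zip": "78701", "category": "Super", "price": "$3.899"},
]
upsert(conn, [normalize(r) for r in scraped])
# A later scrape after a price change overwrites the old row instead of duplicating it.
upsert(conn, [normalize({"station": "Lakeside Gas", "zip": "78701",
                         "category": "Reg", "price": "$3.499"})])
print(cheapest(conn, "78701", "regular"))  # → ('Main St Fuel', 3.459)
```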

To tackle this challenge, the developers deployed RPA (robotic process automation) technology and Python-based machine learning for the web scraping processes. One of the biggest advantages AI-based RPA technology provides here is the capacity to interact with a vast range of applications and data through digital channels like APIs, database connectors, website UIs, and many others.

The RPA and machine learning technologies were used to extract and organize data and also to build an API for implementing real-time price updates on the marketer’s database.

But although RPA can automate interactions with various applications and data, it cannot easily process unstructured data or make sophisticated decisions beyond those based on simple preset rules. So while the RPA technology enables high-speed automated data extraction, the machine learning algorithm is deployed to organize the data.

Across successive iterations, the technologies complement each other at different stages of the process. For instance, the A.I technology helped hone the search queries the RPA technology used to find and extract data. In turn, the RPA fed the A.I technology more relevant data it could use to deduce the desired results based on the user’s preferences.

What’s Your Use-case of AI-Based Web Scraping?

Wondering which A.I-based tools to use to improve the accuracy of your data collection? Need faster turnaround times for your data collection? Want to improve personalization with your web scraping process? These case studies provide plenty of clues.

They demonstrate how A.I web scraping tools can be used to amp up the accuracy and reliability of your web scraping processes. A.I can be deployed to enhance the effectiveness of various steps of your web scraping process, from data extraction to analysis and storage.

A.I web scraping integrations can help eliminate costly human errors in predictions and also free up valuable working time. You can leverage these advantages to gain a competitive edge and also improve your bottom line.