As artificial intelligence (AI) moves from perceived threat to practical opportunity, organizations are working to become AI-first across the board. The ones succeeding are delivering AI-powered features and services so seamlessly integrated into our lives that we hardly notice them.

Still, the ROI for many AI projects has been underwhelming. The bigger story? AI is one of digital transformation’s key components, and if businesses can’t afford to put off digital transformation anymore, they can’t afford to put off AI either. It’s not a question of if, but when, which means it’s time for organizations to address the fundamental issues holding machine learning (ML) and AI projects back.

The solution: modern infrastructure and data storage that deliver the hybrid cloud flexibility legacy IT simply can’t provide.

DevOps, AI, and the Limitations of Legacy IT

It’s always interesting to me how most AI initiatives end up being discussions about humans. On the IT level, it’s no different. AI projects are often a story of two teams, two types of workflows, and two ways of getting things done, and of how to bridge the gap between them.

Emily Potyraj, an AI Solutions Architect here at Pure, wrote an excellent article that explains the concept of model debt and the friction between these teams. She notes that IT teams are typically in charge of building out the infrastructure for AI teams, but these aren’t typical software projects. The data pipelines they require look very different from traditional software data pipelines: they can reside in, and move between, on-premises data centers, hybrid clouds, and the edge.

Trying to shoehorn new data initiatives into old ways of wrangling data can cause several problems:

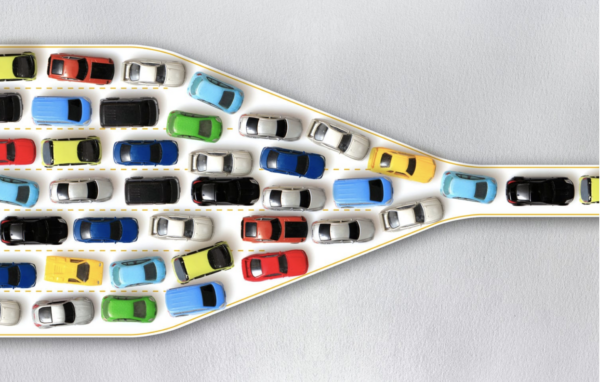

- DevOps and AI/ML teams are wired differently. Data science teams rely on DevOps teams to “industrialize” their data pipelines, but DevOps teams can struggle to support them with legacy solutions. The data mobility that data science teams need can quickly overload DevOps.

- Legacy IT infrastructure is too brittle for AI and ML at scale. Traditional IT infrastructure and data storage solutions aren’t built to handle AI and ML teams’ requirements. It’s software 1.0 machinery trying to power multicloud environments and software 2.0 initiatives.

- Shadow projects lack access to the right resources. When an AI project sits outside the data center and the broader IT organization, its team can struggle with limited access to shared services and resources.

- AI/ML data gets locked down or siloed. If data is siloed, or can’t move quickly enough between on-premises systems and the cloud for experimentation and inference, AI projects can stall.

All of this raises the question: Why are so many organizations still trying to run AI and ML workflows on traditional IT infrastructure?

Where Multicloud, DevOps, and Data Meet: “MLOps”

To bridge the two, a new IT discipline has emerged: “MLOps.” MLOps (sometimes loosely called “AIOps”) is a modern mindset focused on making the architectural choices AI teams need to thrive. MLOps teams work best when they have access to a modern mix of technologies that make AI’s demands on data feasible.

Several modern infrastructure components can better support the demands of AI data pipelines:

- Faster compute power and accelerated networking capabilities. Using GPUs rather than CPUs can give projects the horsepower they need. Integrated hardware and software solutions like AIRI (AI-Ready Infrastructure) address storage out of the box, without the need to rearchitect the data center. With Pure FlashBlade and NVIDIA DGX systems, models that took a week to train can now be trained in 58 minutes.

- Cloud-native file and object storage. Modern flash storage can reduce friction and simplify how data moves between hybrid and multicloud environments, and snapshots can give data teams portable point-in-time copies of live data. Data-intensive applications like AI can require low-latency, high-throughput access to data without compromising on compliance or data sovereignty. This webinar shares helpful tips to accelerate AI application delivery with AWS Outposts and fast flash storage.

- Multicloud, hybrid cloud, and container infrastructures. Going multicloud for AI, ML, and deep learning initiatives can give teams agility and “a plethora of choices” to pick and choose cloud services without vendor lock-in¹. One use case: running on premises for compliance, speed, and cost savings, while using AWS Outposts for the control plane so teams don’t have to manage every server, network, and application themselves. Pure Storage supports Outposts on FlashBlade.

How Hybrid Cloud, Multicloud, and Modern Storage Contribute to AI Success

Trifacta notes that “The benefits of the cloud are hard to overestimate in particular as it relates to the ability to quickly scale analytics and AI/ML initiatives.” Its reports reveal that 66% of respondents are running all or most of their AI/ML initiatives in the cloud. These initiatives can also be highly mobile: data pipelines for AI workflows may move between colocated, local, and public cloud components, which makes an upgraded data storage solution critical.

Crater Labs, a software development company that leverages AI and ML research, is no stranger to ambitious projects. On legacy storage, the team ran into several challenges: maxed-out storage capacity, which left researchers waiting for experiments to run; a lack of flexibility in managing data across a hybrid cloud environment; and the constant need to shuffle data around.

To support the kinds of endeavors that let them “shoot for the moon” for their clients, Crater Labs moved from legacy storage to a modern environment with Pure Storage. Across all projects, Pure FlashBlade gives Crater Labs a competitive edge, helping researchers move data easily and run multiple experiments simultaneously. The result: AI projects unhindered by resource constraints and data loss concerns.

Global Response, a leading business process outsourcing company serving brands such as Toyota and Lacoste, turned to AI to develop a state-of-the-art contact center system. The goal: to deliver personalized customer experiences with real-time transcription and analysis of support calls. To make the solution a reality, the company built an infrastructure powered by NVIDIA and Pure.

The Potential for AI Success at Scale

As AI and ML projects proliferate and mature, we’re getting a clearer picture of what works and what doesn’t. These projects are challenging and complex, but AI-powered data and services hold immense potential across an organization, not just in the data science sandbox. A single data plane just might be the key to solving these challenges: it can streamline multicloud environments and create a standardized backend that makes moving data around far easier.

CIOs and CTOs have a huge opportunity here to future-proof their organizations with infrastructure that aligns with their AI strategies. As Emily Potyraj so perfectly put it, “Only you and your executives can work through those hurdles.”

It starts with understanding the role of data in AI initiatives, then strategically upgrading data storage in multicloud environments to match. From there, it’s all open road.

Article originally published on PureStorage.com